```r
# get rid of workspace
rm(list = ls())

# load packages
library(dplyr)
library(ggplot2)
library(readr)
library(kableExtra)
library(xpose4)
library(tidyr)
library(plotly)

# define base path
base_path <- paste0(here::here(), "/posts/understanding_nlme_estimation/")

# define function for html table output
mytbl <- function(tbl){
  tbl |>
    kable() |>
    kable_styling()
}
```

1 Prologue

1.1 Motivation

In this (somewhat lengthy) document I want to share my attempt at understanding and reproducing NONMEM’s objective function in R. Of course you can use NONMEM effectively without knowing the exact calculations behind the objective function and I did so myself for quite a while. But I believe that it’s helpful to have some understanding of what’s happening under the hood, even if it’s just to some extent. Calculating the objective function manually and understanding the math behind the estimation has always been on my bucket list, but I never really knew where to start. After getting some very helpful input during the PharMetrX (2024) A5 module, I felt ready to give it at least a try. So, here we are!

1.2 Structure

Let me briefly outline how this document is structured. The main goal is to calculate the objective function manually in R using explicit, step-by-step equations. I would also like to visualize its 3D surface to see the path the estimation algorithm takes. Furthermore, I want to reproduce two key steps associated with the estimation: the COVARIANCE step to assess parameter precision and the POSTHOC step to obtain individual parameter estimates. As we work through these calculations, I also aim to explore (and explain to myself) some of the theory and intuition behind the concepts along the way.

To achieve this, we will first define a simple one-compartment pharmacokinetic model with intravenous bolus administration and first-order elimination (Section 2). Afterwards, we will use this model to simulate virtual concentration-time data (Section 3), which we will then fit using the Laplacian estimation method (Section 4) to obtain a reference solution. I chose the Laplacian algorithm because it makes fewer assumptions and simplifications than FOCE or FO, so it should be easier to go from Laplacian to FOCE-I than vice versa.

Then, in the largest part of this document, we will construct the equations needed to calculate the objective function value for a given set of parameters and try to understand why we take each step (Section 5). Afterwards, we will implement these functions in R (Section 6), reproduce each iteration of the NONMEM run, and compare the results to the reference solution.

Finally, the reward for all the hard work: we will visualize the objective function surface in a 3D plot (Section 7). This should give us a better understanding of the search algorithm and of how the objective function behaves as a function of the parameter values.

After that, we will attempt a complete estimation directly in R using the optim() function (Section 8) instead of only reproducing the objective function based on the iterations NONMEM took. We will compare our R-based parameter estimates against those obtained by NONMEM and discuss any differences.

Following this, we will mimic the COVARIANCE step by retrieving and inverting the Hessian matrix (Section 9) obtained during the R-based optimization to assess parameter precision (relative standard errors). Finally, we will calculate the individual ETAs by reproducing the POSTHOC step (Section 10) and compare the results against NONMEM's outputs.

Before we get started, I want to note a few disclaimers to ward off any imposter syndrome that might kick in. I'm just a PhD student, not formally trained in mathematics or statistics, and I'm learning as I go. If you're expecting an entirely error-free derivation with coherent statistical notation, this is not the best resource; you are better off with Wang (2007) in that case. My focus here is more on intuition and on developing a general understanding of the underlying processes.

Much of the content in this document is based on the work of others, and there’s not a lot of original thought here. I’ve relied heavily on several key publications (e.g., Wang (2007)), gained a lot of intuition from the PharMetrX (2024) A5 module, had important input from colleagues (see above) and used tools like Wolfram/Mathematica for some calculations and ChatGPT for parts of the writing. With that being said, let’s start!

2 Model definition

As written above, we will try this exercise with one of the simplest models we can think of: a one-compartment PK model with i.v. bolus administration and first-order elimination. Additionally, we only estimate two parameters: i) the typical clearance \(\theta_{TVCL}\) and ii) the variance of the inter-individual variability on clearance, \(\omega^2\). The volume of distribution \(V_D\) and the variance of the residual unexplained variability \(\sigma^2\) are assumed to be fixed and known. Dealing with only two parameters allows us to plot the objective function value as a 3D surface, and it simplifies our lives a bit. Here is a little sketch of the model:

```r
# Create the plot
ggplot() +
  # Arrow for Dose (IV Bolus) -> Central Compartment
  geom_segment(aes(x = 1, y = 2, xend = 2, yend = 2),
               arrow = arrow(type = "closed", length = unit(0.3, "cm")),
               color = "black") +
  geom_text(aes(x = 1.5, y = 2.05, label = "Dose (i.v. bolus)"), size = 4) +
  # Arrow for Central Compartment -> Clearance (CL + IIV)
  geom_segment(aes(x = 3, y = 2, xend = 5, yend = 2),
               arrow = arrow(type = "closed", length = unit(0.3, "cm")),
               color = "black") +
  geom_text(aes(x = 4.5, y = 2.05, label = "Clearance (+ IIV)"), size = 4) +
  # Central Compartment (rectangle)
  geom_rect(aes(xmin = 2, xmax = 4, ymin = 1.70, ymax = 2.30),
            fill = "white", color = "black", size = 1.5) +
  geom_text(aes(x = 3, y = 2, label = "Central Compartment"), size = 5) +
  geom_text(aes(x = 3.85, y = 1.75, label = "VD"), size = 4) +
  # Set limits and theme
  xlim(1, 5) +
  ylim(1.5, 2.5) +
  theme_void() +
  theme(
    panel.grid = element_blank(),
    plot.margin = unit(c(0, 0, 0, 0), "cm"),
    plot.background = element_rect(fill = "transparent", color = NA)
  )
```

Figure 1: Schematic of a simple, one-compartment pharmacokinetic model with intravenous bolus administration. The model includes clearance with inter-individual variability, and a fixed volume of distribution.

The first step is to define this model structure with some dummy parameter values inside NONMEM, which we will do now.

2.1 Model code

The NONMEM model code for our simple one-compartment PK model is shown below. Please note that we are using the ADVAN1 routine, which relies on the analytical expression for a one-compartment model, rather than an ODE solver.

1cmt_iv_sim.mod

```
# read_file(paste0(base_path, "/models/simulation/1cmt_iv_sim.mod"))
$PROBLEM 1cmt_iv_sim

$INPUT ID TIME EVID AMT RATE DV MDV

$DATA C:\Users\mklose\Desktop\G\Mitarbeiter\Klose\Miscellaneous\NLME_reproduction_R\data\input_for_sim\input_data.csv IGNORE=@

$SUBROUTINES ADVAN1 TRANS2

$PK
; define fixed effects parameters
CL = THETA(1) * EXP(ETA(1))
V = THETA(2)

; scaling
S1 = V

$THETA
(0, 0.2, 1)   ; 1 TVCL
3.15 FIX      ; 2 TVV

$OMEGA
0.2           ; 1 OM_CL

$SIGMA
0.1 FIX       ; 1 SIG_ADD

$ERROR
; add additive error
Y = F + EPS(1)

; store error for table output
ERR1 = EPS(1)

; $ESTIMATION METHOD=COND LAPLACIAN MAXEVAL=9999 SIGDIG=3 PRINT=1 NOABORT POSTHOC
$SIMULATION (12345678) ONLYSIM

$TABLE ID TIME EVID AMT RATE DV MDV ETA1 ERR1 NOAPPEND ONEHEADER NOPRINT FILE=sim_out
```

Depending on the task (simulation vs. estimation), we'll adjust the model code slightly. For simulation, we use $SIMULATION (12345678) ONLYSIM (with the $ESTIMATION record commented out, as shown above), and for estimation we use $ESTIMATION METHOD=COND LAPLACIAN MAXEVAL=9999 SIGDIG=3 PRINT=1 NOABORT POSTHOC. Additionally, the $DATA record differs between the two tasks: for simulation, we just pass the dosing and sampling scheme; for estimation, we use the dataset simulated in the first step, including the simulated concentrations in the DV column.
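Because ADVAN1 relies on the analytical solution, the prediction F for a single bolus dose can be written down directly. With S1 = V scaling the amount to a concentration, it is \(C(t) = \frac{Dose}{V} e^{-\frac{CL}{V} t}\). The line Y = F + EPS(1) then adds the additive residual error. The base-R sketch below assumes a single bolus at time 0 and no other doses. The function name `conc_1cmt_iv()` is hypothetical and not part of the original workflow.

```r
# Hypothetical helper: analytical one-compartment model, single i.v. bolus at t = 0
# C(t) = Dose / V * exp(-(CL / V) * t), i.e. F in the NONMEM model with S1 = V
conc_1cmt_iv <- function(time, dose, cl, v) {
  k <- cl / v                  # first-order elimination rate constant (1/h)
  dose / v * exp(-k * time)    # concentration (mg/L)
}

# Typical profile with the values from the simulation control stream
# (TVCL = 0.2 L/h, V = 3.15 L, dose = 100 mg), at the planned sampling times
times <- c(0.01, 3, 6, 12, 24)
conc_1cmt_iv(times, dose = 100, cl = 0.2, v = 3.15)

# Elimination half-life implied by these values: ln(2) * V / CL
log(2) * 3.15 / 0.2
```

With these typical values the half-life is about 10.9 h, so the 24 h sampling window covers roughly two half-lives.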

3 Data simulation

Okay, so far so good. We have our model in NONMEM and have defined some dummy values for the parameter estimates. Now we want to simulate virtual concentration-time data that will be used for model fitting later on. Typically, you would have clinical data at hand, but for this exercise we generate our own virtual clinical data. We begin by constructing an input dataset that represents our dosing and sampling scheme, then simulate the concentration-time profiles, and finally visualize the pharmacokinetics to get a first impression of the data.

3.1 Input dataset generation

To simulate data, we consider a scenario with 10 individuals, each having five observations at different time points within 24 hours. The selected time points are 0.01, 3, 6, 12, and 24 hours. To my understanding, it is crucial to have at least two observations per individual to reliably estimate inter-individual variability, as having only one observation per individual would make it impossible to distinguish between inter-individual variability and residual variability.

The dataset includes dosing records (EVID = 1) and observation records (EVID = 0). For now, the dependent variable (DV) is set to -99 as a placeholder, since we will simulate these values in the following steps. Here is what our input dataset looks like (first 10 rows):

```r
# generate NONMEM input dataset for n individuals with 5 observations at different timepoints
n_ind <- 10
n_obs <- 5
timepoints <- c(0.01, 3, 6, 12, 24)

# observation events (EVID == 0)
obs_input <- tibble(
  ID = rep(1:n_ind, each = n_obs),
  TIME = rep(timepoints, n_ind),
  EVID = 0,
  AMT = 0,
  RATE = 0,
  DV = -99, # DV is what we want to simulate
  MDV = 0
)

# dosing events (EVID == 1)
dosing_input <- tibble(
  ID = rep(1:n_ind, each = 1),
  TIME = 0,
  EVID = 1,
  AMT = 100,
  RATE = 0,
  DV = 0,
  MDV = 1
)

# bind together and sort by ID and TIME
input_data <- bind_rows(obs_input, dosing_input) |>
  arrange(ID, TIME)

# show input data
input_data |>
  head(n = 10) |>
  mytbl()
```

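| ID | TIME | EVID | AMT | RATE | DV | MDV |
|---:|-----:|-----:|----:|-----:|---:|----:|
| 1 | 0.00 | 1 | 100 | 0 | 0 | 1 |
| 1 | 0.01 | 0 | 0 | 0 | -99 | 0 |
| 1 | 3.00 | 0 | 0 | 0 | -99 | 0 |
| 1 | 6.00 | 0 | 0 | 0 | -99 | 0 |
| 1 | 12.00 | 0 | 0 | 0 | -99 | 0 |
| 1 | 24.00 | 0 | 0 | 0 | -99 | 0 |
| 2 | 0.00 | 1 | 100 | 0 | 0 | 1 |
| 2 | 0.01 | 0 | 0 | 0 | -99 | 0 |
| 2 | 3.00 | 0 | 0 | 0 | -99 | 0 |
| 2 | 6.00 | 0 | 0 | 0 | -99 | 0 |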

```r
# save data to file
write_csv(input_data, paste0(base_path, "data/input_for_sim/input_data.csv"))
# write_csv(input_data, "C:\\Users\\mklose\\Desktop\\G\\Mitarbeiter\\Klose\\Miscellaneous\\NLME_reproduction_R\\data\\input_for_sim\\input_data.csv")
```

We arbitrarily decided that each individual receives the same dose of 100 mg at time 0. Same dose fits all, right? We can see that at 0.01, 3, 6, 12, and 24 hours after dosing we encoded sampling events (EVID = 0). We can now plug this dataset into NONMEM and simulate these concentrations!

3.2 Simulation

With the input dataset generated, the next step is to use it to simulate virtual concentration-time data. The simulation is performed in NONMEM with the dosing and sampling dataset defined above, so it is executed separately from this R session.

3.2.1 Read in simulated data

After running the simulation in NONMEM, the generated output (in our case a file called sim_out) contains the simulated concentration values within the DV column. The simulated dataset also includes additional columns such as ETA1, which represents the realization of inter-individual variability, and ERR1, which represents the realization of residual unexplained variability (RUV). So for each individual we have drawn one ETA1 and for each observation we have one ERR1.
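The code that reads sim_out into R is not shown here. Below is a minimal sketch. It assumes that sim_out is a standard NONMEM table file written with ONEHEADER: one "TABLE NO.  1" title line followed by a whitespace-separated header row. This is the same layout that makes `skip = 1` work for the .ext file further below. The helper name `read_nm_table()` and the file path in the usage line are assumptions. Base `read.table()` is used to keep the sketch dependency-free.

```r
# Hypothetical helper: read a NONMEM $TABLE output file into a data frame
# Assumes line 1 is the "TABLE NO.  1" title and line 2 holds the column names
read_nm_table <- function(path) {
  read.table(path, skip = 1, header = TRUE)
}

# Usage (assumed path, adjust to where NONMEM wrote sim_out):
# sim_data <- read_nm_table(paste0(base_path, "models/simulation/sim_out"))
```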

We can see that we obtained a dataset with the simulated concentration values in the DV column. The simulated data now needs to be saved as .csv in order to use it in the subsequent steps within NONMEM.

```r
# subset dataframe
sim_data_reduced <- sim_data |>
  select(ID, TIME, EVID, AMT, RATE, DV, MDV)

# save dataframe
write_csv(sim_data_reduced, paste0(base_path, "data/output_from_sim/sim_data.csv"))

# filter to have EVID == 0 only
sim_data_reduced <- sim_data_reduced |>
  filter(EVID == 0)
```

Great! The sim_data.csv file was saved successfully, and we can later use it to generate the reference solution in NONMEM.

3.3 Concentration-time profiles

Now we can visualize the simulated data stratified by individual to get a feeling for our virtual clinical data.

```r
# show individual profiles
sim_data |>
  filter(EVID == 0) |>
  ggplot(aes(x = TIME, y = DV, group = ID, color = as.factor(ID))) +
  geom_point() +
  geom_line() +
  theme_bw() +
  scale_y_continuous(limits = c(0, NA)) +
  labs(x = "Time after last dose [h]", y = "Concentration [mg/L]") +
  ggtitle("Simulated data") +
  scale_color_discrete("Individual")
```

Figure 2: Simulated concentration-time profiles for 10 individuals.

As each of the 10 individuals received the same dose, the differences between the profiles clearly show the impact of inter-individual variability in clearance. Additionally, the individual data points are affected by the residual unexplained variability, which adds noise to the measurements.
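Conceptually, the $SIMULATION step draws one ETA per individual and one EPS per observation and plugs them into the model. The base-R sketch below mimics that logic with the values from the simulation control stream: TVCL = 0.2, V = 3.15, OMEGA = 0.2 and SIGMA = 0.1, where OMEGA and SIGMA are variances. It uses R's own random number generator, so it will not reproduce NONMEM's simulated values even with the same seed. The function name `simulate_1cmt()` is hypothetical.

```r
# Hypothetical sketch of the simulation logic (R's RNG, not NONMEM's)
simulate_1cmt <- function(n_ind = 10,
                          times = c(0.01, 3, 6, 12, 24),
                          dose = 100, tvcl = 0.2, v = 3.15,
                          omega_sq = 0.2, sigma_sq = 0.1, seed = 12345678) {
  set.seed(seed)
  # one random effect per individual: eta_i ~ N(0, omega^2)
  eta <- rnorm(n_ind, mean = 0, sd = sqrt(omega_sq))
  cl  <- tvcl * exp(eta)                          # individual clearance (L/h)

  # expand to one row per observation
  id    <- rep(seq_len(n_ind), each = length(times))
  time  <- rep(times, times = n_ind)
  ipred <- dose / v * exp(-cl[id] / v * time)     # individual prediction F

  # one residual error per observation: eps_ij ~ N(0, sigma^2)
  eps <- rnorm(length(ipred), mean = 0, sd = sqrt(sigma_sq))

  data.frame(ID = id, TIME = time, ETA1 = eta[id], IPRED = ipred,
             ERR1 = eps, DV = ipred + eps)
}

sim <- simulate_1cmt()
head(sim)
```

Each individual carries a single ETA1 across all of its rows, while ERR1 changes from row to row. This matches the two levels of variability visible in the plot above.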

We are making progress! Next, we want to generate a reference solution for the estimation within NONMEM. This allows us, in the end, to compare our own implementation of the objective function to the one NONMEM uses.

4 NONMEM estimation (reference solution)

The idea is now to use the virtual clinical data generated in the last step to perform an estimation with the Laplacian algorithm in NONMEM. This will serve as a reference solution against which we can compare the results of our own implementation. We use the same model that was used to simulate the data, but this time for an estimation problem. Therefore, we activate the $ESTIMATION record in the model code (see Section 2.1): $ESTIMATION METHOD=COND LAPLACIAN MAXEVAL=9999 SIGDIG=3 PRINT=1 NOABORT POSTHOC. As the .lst file below shows, the estimation model also starts from different initial estimates (THETA(1) = 0.1, OMEGA = 0.15), adds a $COVARIANCE PRINT=E record, and no longer contains the $SIMULATION record.

4.1 Estimation

As mentioned before, the actual estimation again happens in NONMEM and is therefore executed separately from this R session. Here, we just read in the NONMEM output files and visualize them.

4.2 NONMEM output files

4.2.1 .lst file

First of all, we can read in the .lst file. It contains a lot of information about the parameter estimation process and is a nice way to get a quick overview of the model run.

```
Mon 12/30/2024 10:54 PM
$PROBLEM 1cmt_iv_est
$INPUT ID TIME EVID AMT RATE DV MDV
$DATA sim_data.csv IGNORE=@
$SUBROUTINE ADVAN1 TRANS2

$PK
; define fixed effects parameters
CL = THETA(1) * EXP(ETA(1))
V = THETA(2)

; scaling
S1 = V

$THETA (0,0.1,1) ; 1 TVCL
3.15 FIX ; 2 TVV

$OMEGA 0.15 ; 1 OM_CL

$SIGMA 0.1 FIX ; 1 SIG_ADD

$ERROR
; add additive error
Y = F + EPS(1)

; store error for table output
ERR1 = EPS(1)

$ESTIMATION METHOD=COND LAPLACIAN MAXEVAL=9999 SIGDIG=3 PRINT=1 NOABORT POSTHOC
$COVARIANCE PRINT=E
$TABLE ID TIME EVID AMT RATE DV MDV ETA1 ERR1 CL NOAPPEND ONEHEADER NOPRINT FILE=estim_out

NM-TRAN MESSAGES
  WARNINGS AND ERRORS (IF ANY) FOR PROBLEM    1

 (WARNING  2) NM-TRAN INFERS THAT THE DATA ARE POPULATION.

License Registered to: Freie Universitaet Berlin Department of Clinical Pharmacy Biochemistry
Expiration Date:    14 JAN 2025
Current Date:       30 DEC 2024
  **** WARNING!!! Days until program expires :  19 ****
  **** CONTACT idssoftware@iconplc.com FOR RENEWAL ****
1NONLINEAR MIXED EFFECTS MODEL PROGRAM (NONMEM) VERSION 7.5.1
 ORIGINALLY DEVELOPED BY STUART BEAL, LEWIS SHEINER, AND ALISON BOECKMANN
 CURRENT DEVELOPERS ARE ROBERT BAUER, ICON DEVELOPMENT SOLUTIONS,
 AND ALISON BOECKMANN. IMPLEMENTATION, EFFICIENCY, AND STANDARDIZATION
 PERFORMED BY NOUS INFOSYSTEMS.

 PROBLEM NO.:         1
 1cmt_iv_est
0DATA CHECKOUT RUN:              NO
 DATA SET LOCATED ON UNIT NO.:    2
 THIS UNIT TO BE REWOUND:        NO
 NO. OF DATA RECS IN DATA SET:   60
 NO. OF DATA ITEMS IN DATA SET:   7
 ID DATA ITEM IS DATA ITEM NO.:   1
 DEP VARIABLE IS DATA ITEM NO.:   6
 MDV DATA ITEM IS DATA ITEM NO.:  7
0INDICES PASSED TO SUBROUTINE PRED:
   3   2   4   5   0   0   0   0   0   0   0
0LABELS FOR DATA ITEMS:
 ID TIME EVID AMT RATE DV MDV
0(NONBLANK) LABELS FOR PRED-DEFINED ITEMS:
 CL ERR1
0FORMAT FOR DATA:
 (7E8.0)

 TOT. NO. OF OBS RECS:       50
 TOT. NO. OF INDIVIDUALS:    10
0LENGTH OF THETA:   2
0DEFAULT THETA BOUNDARY TEST OMITTED:    NO
0OMEGA HAS SIMPLE DIAGONAL FORM WITH DIMENSION:   1
0DEFAULT OMEGA BOUNDARY TEST OMITTED:    NO
0SIGMA HAS SIMPLE DIAGONAL FORM WITH DIMENSION:   1
0DEFAULT SIGMA BOUNDARY TEST OMITTED:    NO
0INITIAL ESTIMATE OF THETA:
 LOWER BOUND    INITIAL EST    UPPER BOUND
  0.0000E+00     0.1000E+00     0.1000E+01
  0.3150E+01     0.3150E+01     0.3150E+01
0INITIAL ESTIMATE OF OMEGA:
 0.1500E+00
0INITIAL ESTIMATE OF SIGMA:
 0.1000E+00
0SIGMA CONSTRAINED TO BE THIS INITIAL ESTIMATE
0COVARIANCE STEP OMITTED:        NO
 EIGENVLS. PRINTED:             YES
 SPECIAL COMPUTATION:            NO
 COMPRESSED FORMAT:              NO
 GRADIENT METHOD USED:       NOSLOW
 SIGDIGITS ETAHAT (SIGLO):                  -1
 SIGDIGITS GRADIENTS (SIGL):                -1
 EXCLUDE COV FOR FOCE (NOFCOV):              NO
 Cholesky Transposition of R Matrix (CHOLROFF):0
 KNUTHSUMOFF:                                -1
 RESUME COV ANALYSIS (RESUME):               NO
 SIR SAMPLE SIZE (SIRSAMPLE):
 NON-LINEARLY TRANSFORM THETAS DURING COV (THBND): 1
 PRECONDTIONING CYCLES (PRECOND):        0
 PRECONDTIONING TYPES (PRECONDS):        TOS
 FORCED PRECONDTIONING CYCLES (PFCOND):0
 PRECONDTIONING TYPE (PRETYPE):0
 FORCED POS. DEFINITE SETTING DURING PRECONDITIONING: (FPOSDEF):0
 SIMPLE POS. DEFINITE SETTING: (POSDEF):-1
0TABLES STEP OMITTED:    NO
 NO. OF TABLES:           1
 SEED NUMBER (SEED):    11456
 NPDTYPE:    0
 INTERPTYPE:    0
 RANMETHOD:             3U
 MC SAMPLES (ESAMPLE):    300
 WRES SQUARE ROOT TYPE (WRESCHOL): EIGENVALUE
0-- TABLE   1 --
0RECORDS ONLY:    ALL
04 COLUMNS APPENDED:    NO
 PRINTED:                NO
 HEADER:                YES
 FILE TO BE FORWARDED:   NO
 FORMAT:                S1PE11.4
 IDFORMAT:
 LFORMAT:
 RFORMAT:
 FIXED_EFFECT_ETAS:
0USER-CHOSEN ITEMS:
 ID TIME EVID AMT RATE DV MDV ETA1 ERR1 CL
1DOUBLE PRECISION PREDPP VERSION 7.5.1

 ONE COMPARTMENT MODEL (ADVAN1)
0MAXIMUM NO. OF BASIC PK PARAMETERS:   2
0BASIC PK PARAMETERS (AFTER TRANSLATION):
   ELIMINATION RATE (K) IS BASIC PK PARAMETER NO.:  1

 TRANSLATOR WILL CONVERT PARAMETERS
 CLEARANCE (CL) AND VOLUME (V) TO K (TRANS2)
0COMPARTMENT ATTRIBUTES
 COMPT. NO.   FUNCTION   INITIAL    ON/OFF      DOSE      DEFAULT    DEFAULT
                         STATUS     ALLOWED    ALLOWED    FOR DOSE   FOR OBS.
    1         CENTRAL      ON         NO         YES        YES        YES
    2         OUTPUT       OFF        YES        NO         NO         NO
1
 ADDITIONAL PK PARAMETERS - ASSIGNMENT OF ROWS IN GG
 COMPT. NO.                INDICES
              SCALE   BIOAVAIL.  ZERO-ORDER  ZERO-ORDER  ABSORB
                      FRACTION   RATE        DURATION    LAG
    1           3         *          *           *          *
    2           *         -          -           -          -
             - PARAMETER IS NOT ALLOWED FOR THIS MODEL
             * PARAMETER IS NOT SUPPLIED BY PK SUBROUTINE;
               WILL DEFAULT TO ONE IF APPLICABLE
0DATA ITEM INDICES USED BY PRED ARE:
   EVENT ID DATA ITEM IS DATA ITEM NO.:      3
   TIME DATA ITEM IS DATA ITEM NO.:          2
   DOSE AMOUNT DATA ITEM IS DATA ITEM NO.:   4
   DOSE RATE DATA ITEM IS DATA ITEM NO.:     5

0PK SUBROUTINE CALLED WITH EVERY EVENT RECORD.
 PK SUBROUTINE NOT CALLED AT NONEVENT (ADDITIONAL OR LAGGED) DOSE TIMES.
0ERROR SUBROUTINE CALLED WITH EVERY EVENT RECORD.
1
 #TBLN:      1
 #METH: Laplacian Conditional Estimation

 ESTIMATION STEP OMITTED:                 NO
 ANALYSIS TYPE:                           POPULATION
 NUMBER OF SADDLE POINT RESET ITERATIONS:      0
 GRADIENT METHOD USED:               NOSLOW
 CONDITIONAL ESTIMATES USED:              YES
 CENTERED ETA:                            NO
 EPS-ETA INTERACTION:                     NO
 LAPLACIAN OBJ. FUNC.:                    YES
 NUMERICAL 2ND DERIVATIVES:               NO
 NO. OF FUNCT. EVALS. ALLOWED:            9999
 NO. OF SIG. FIGURES REQUIRED:            3
 INTERMEDIATE PRINTOUT:                   YES
 ESTIMATE OUTPUT TO MSF:                  NO
 ABORT WITH PRED EXIT CODE 1:             NO
 IND. OBJ. FUNC. VALUES SORTED:           NO
 NUMERICAL DERIVATIVE FILE REQUEST (NUMDER): NONE
 MAP (ETAHAT) ESTIMATION METHOD (OPTMAP):   0
 ETA HESSIAN EVALUATION METHOD (ETADER):    0
 INITIAL ETA FOR MAP ESTIMATION (MCETA):    0
 SIGDIGITS FOR MAP ESTIMATION (SIGLO):      100
 GRADIENT SIGDIGITS OF FIXED EFFECTS PARAMETERS (SIGL): 100
 NOPRIOR SETTING (NOPRIOR):                 0
 NOCOV SETTING (NOCOV):                     OFF
 DERCONT SETTING (DERCONT):                 OFF
 FINAL ETA RE-EVALUATION (FNLETA):          1
 EXCLUDE NON-INFLUENTIAL (NON-INFL.) ETAS IN SHRINKAGE (ETASTYPE): NO
 NON-INFL. ETA CORRECTION (NONINFETA):      0
 RAW OUTPUT FILE (FILE): psn.ext
 EXCLUDE TITLE (NOTITLE):                   NO
 EXCLUDE COLUMN LABELS (NOLABEL):           NO
 FORMAT FOR ADDITIONAL FILES (FORMAT):      S1PE12.5
 PARAMETER ORDER FOR OUTPUTS (ORDER):       TSOL
 KNUTHSUMOFF:                               0
 INCLUDE LNTWOPI:                           NO
 INCLUDE CONSTANT TERM TO PRIOR (PRIORC):   NO
 INCLUDE CONSTANT TERM TO OMEGA (ETA) (OLNTWOPI):NO
 ADDITIONAL CONVERGENCE TEST (CTYPE=4)?:    NO
 EM OR BAYESIAN METHOD USED:                NONE

 THE FOLLOWING LABELS ARE EQUIVALENT
 PRED=NPRED
 RES=NRES
 WRES=NWRES
 IWRS=NIWRES
 IPRD=NIPRED
 IRS=NIRES

 MONITORING OF SEARCH:

0ITERATION NO.:    0    OBJECTIVE VALUE:   41.8454368139310        NO. OF FUNC. EVALS.:   4
 CUMULATIVE NO. OF FUNC. EVALS.:        4
 NPARAMETR:  1.0000E-01  1.5000E-01
 PARAMETER:  1.0000E-01  1.0000E-01
 GRADIENT:  -1.0545E+02 -1.0294E+02

0ITERATION NO.:    1    OBJECTIVE VALUE:  -2.74307593433339        NO. OF FUNC. EVALS.:   5
 CUMULATIVE NO. OF FUNC. EVALS.:        9
 NPARAMETR:  1.6837E-01  4.8397E-01
 PARAMETER:  7.0000E-01  6.8569E-01
 GRADIENT:   2.8868E+00  9.4212E+00

0ITERATION NO.:    2    OBJECTIVE VALUE:  -3.06819849357734        NO. OF FUNC. EVALS.:   8
 CUMULATIVE NO. OF FUNC. EVALS.:       17
 NPARAMETR:  1.6369E-01  3.8814E-01
 PARAMETER:  6.6620E-01  5.7537E-01
 GRADIENT:  -2.2554E+00  5.6641E+00

0ITERATION NO.:    3    OBJECTIVE VALUE:  -11.2971148236422        NO. OF FUNC. EVALS.:   5
 CUMULATIVE NO. OF FUNC. EVALS.:       22
 NPARAMETR:  2.1655E-01  1.4655E-01
 PARAMETER:  1.0114E+00  8.8367E-02
 GRADIENT:   6.6259E+00  2.6609E+00

0ITERATION NO.:    4    OBJECTIVE VALUE:  -11.2971148236422        NO. OF FUNC. EVALS.:  10
 CUMULATIVE NO. OF FUNC. EVALS.:       32
 NPARAMETR:  2.1655E-01  1.4655E-01
 PARAMETER:  1.0114E+00  8.8367E-02
 GRADIENT:  -1.3899E+01  2.6592E+00

0ITERATION NO.:    5    OBJECTIVE VALUE:  -12.4187275503686        NO. OF FUNC. EVALS.:   7
 CUMULATIVE NO. OF FUNC. EVALS.:       39
 NPARAMETR:  2.5349E-01  8.6243E-02
 PARAMETER:  1.2172E+00 -1.7673E-01
 GRADIENT:   4.7000E+00 -5.6454E+00

0ITERATION NO.:    6    OBJECTIVE VALUE:  -12.7891181563899        NO. OF FUNC. EVALS.:   7
 CUMULATIVE NO. OF FUNC. EVALS.:       46
 NPARAMETR:  2.4329E-01  1.1715E-01
 PARAMETER:  1.1625E+00 -2.3573E-02
 GRADIENT:  -1.7854E+00  1.1790E+00

0ITERATION NO.:    7    OBJECTIVE VALUE:  -12.8236151522707        NO. OF FUNC. EVALS.:   7
 CUMULATIVE NO. OF FUNC. EVALS.:       53
 NPARAMETR:  2.4614E-01  1.1120E-01
 PARAMETER:  1.1779E+00 -4.9675E-02
 GRADIENT:  -2.9776E-01  2.0940E-01

0ITERATION NO.:    8    OBJECTIVE VALUE:  -12.8245888793110        NO. OF FUNC. EVALS.:   7
 CUMULATIVE NO. OF FUNC. EVALS.:       60
 NPARAMETR:  2.4672E-01  1.0995E-01
 PARAMETER:  1.1810E+00 -5.5291E-02
 GRADIENT:   1.8561E-02 -1.2024E-02

0ITERATION NO.:    9    OBJECTIVE VALUE:  -12.8245933812655        NO. OF FUNC. EVALS.:   7
 CUMULATIVE NO. OF FUNC. EVALS.:       67
 NPARAMETR:  2.4668E-01  1.1002E-01
 PARAMETER:  1.1808E+00 -5.4985E-02
 GRADIENT:  -1.3169E-04  1.3509E-04

0ITERATION NO.:   10    OBJECTIVE VALUE:  -12.8245933812655        NO. OF FUNC. EVALS.:   4
 CUMULATIVE NO. OF FUNC. EVALS.:       71
 NPARAMETR:  2.4668E-01  1.1002E-01
 PARAMETER:  1.1808E+00 -5.4985E-02
 GRADIENT:  -1.3169E-04  1.3509E-04

 #TERM:
0MINIMIZATION SUCCESSFUL
 NO. OF FUNCTION EVALUATIONS USED:       71
 NO. OF SIG. DIGITS IN FINAL EST.:  4.5

 ETABAR IS THE ARITHMETIC MEAN OF THE ETA-ESTIMATES,
 AND THE P-VALUE IS GIVEN FOR THE NULL HYPOTHESIS THAT THE TRUE MEAN IS 0.

 ETABAR:        -1.8954E-05
 SE:             1.0473E-01
 N:                      10

 P VAL.:         9.9986E-01

 ETASHRINKSD(%)  1.5107E-01
 ETASHRINKVR(%)  3.0192E-01
 EBVSHRINKSD(%)  1.3728E-01
 EBVSHRINKVR(%)  2.7437E-01
 RELATIVEINF(%)  9.9726E+01
 EPSSHRINKSD(%)  1.8336E+01
 EPSSHRINKVR(%)  3.3311E+01

 TOTAL DATA POINTS NORMALLY DISTRIBUTED (N):           50
 N*LOG(2PI) CONSTANT TO OBJECTIVE FUNCTION:    91.893853320467272
 OBJECTIVE FUNCTION VALUE WITHOUT CONSTANT:    -12.824593381265490
 OBJECTIVE FUNCTION VALUE WITH CONSTANT:       79.069259939201785
 REPORTED OBJECTIVE FUNCTION DOES NOT CONTAIN CONSTANT

 TOTAL EFFECTIVE ETAS (NIND*NETA):                            10

 #TERE:
 Elapsed estimation time in seconds:     0.02
 Elapsed covariance time in seconds:     0.00
 Elapsed postprocess time in seconds:     0.00
1
 ************************************************************
 *********     LAPLACIAN CONDITIONAL ESTIMATION     *********
 #OBJT:***   MINIMUM VALUE OF OBJECTIVE FUNCTION    *********
 ************************************************************

 #OBJV:******************************************** -12.825 **************************************************
1
 ************************************************************
 *********     LAPLACIAN CONDITIONAL ESTIMATION     *********
 *********         FINAL PARAMETER ESTIMATE         *********
 ************************************************************

 THETA - VECTOR OF FIXED EFFECTS PARAMETERS   *********

         TH 1      TH 2
         2.47E-01  3.15E+00

 OMEGA - COV MATRIX FOR RANDOM EFFECTS - ETAS  ********

            ETA1
 ETA1
 +        1.10E-01

 SIGMA - COV MATRIX FOR RANDOM EFFECTS - EPSILONS  ****

            EPS1
 EPS1
 +        1.00E-01

1
 OMEGA - CORR MATRIX FOR RANDOM EFFECTS - ETAS  *******

            ETA1
 ETA1
 +        3.32E-01

 SIGMA - CORR MATRIX FOR RANDOM EFFECTS - EPSILONS  ***

            EPS1
 EPS1
 +        3.16E-01

1
 ************************************************************
 *********     LAPLACIAN CONDITIONAL ESTIMATION     *********
 *********        STANDARD ERROR OF ESTIMATE        *********
 ************************************************************

 THETA - VECTOR OF FIXED EFFECTS PARAMETERS   *********

         TH 1      TH 2
         2.59E-02 .........

 OMEGA - COV MATRIX FOR RANDOM EFFECTS - ETAS  ********

            ETA1
 ETA1
 +        4.87E-02

 SIGMA - COV MATRIX FOR RANDOM EFFECTS - EPSILONS  ****

            EPS1
 EPS1
 +       .........

1
 OMEGA - CORR MATRIX FOR RANDOM EFFECTS - ETAS  *******

            ETA1
 ETA1
 +        7.35E-02

 SIGMA - CORR MATRIX FOR RANDOM EFFECTS - EPSILONS  ***

            EPS1
 EPS1
 +       .........

1
 ************************************************************
 *********     LAPLACIAN CONDITIONAL ESTIMATION     *********
 *********      COVARIANCE MATRIX OF ESTIMATE       *********
 ************************************************************

            TH 1      TH 2      OM11      SG11
 TH 1
 +        6.72E-04
 TH 2
 +       .........  .........
 OM11
 +        7.98E-04  .........  2.37E-03
 SG11
 +       .........  .........  .........  .........

1
 ************************************************************
 *********     LAPLACIAN CONDITIONAL ESTIMATION     *********
 *********      CORRELATION MATRIX OF ESTIMATE      *********
 ************************************************************

            TH 1      TH 2      OM11      SG11
 TH 1
 +        2.59E-02
 TH 2
 +       .........  .........
 OM11
 +        6.32E-01  .........  4.87E-02
 SG11
 +       .........  .........  .........  .........

1
 ************************************************************
 *********     LAPLACIAN CONDITIONAL ESTIMATION     *********
 *********  INVERSE COVARIANCE MATRIX OF ESTIMATE   *********
 ************************************************************

            TH 1      TH 2      OM11      SG11
 TH 1
 +        2.48E+03
 TH 2
 +       .........  .........
 OM11
 +       -8.32E+02  .........  7.01E+02
 SG11
 +       .........  .........  .........  .........

1
 ************************************************************
 *********     LAPLACIAN CONDITIONAL ESTIMATION     *********
 *********   EIGENVALUES OF COR MATRIX OF ESTIMATE  *********
 ************************************************************

             1         2
         3.68E-01  1.63E+00

 Elapsed finaloutput time in seconds:     0.01
 #CPUT: Total CPU Time in Seconds,        0.016
Stop Time:
Mon 12/30/2024 10:54 PM
```

It is quite a long file with a lot of text, which is why the output is collapsed.
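One detail in the .lst file is worth noting. NONMEM reports the objective function without the constant N·log(2π), where N is the number of normally distributed data points (here N = 50). A quick arithmetic check with the values printed in the .lst file:

```r
# Values copied from the .lst file above
n_obs       <- 50                     # TOTAL DATA POINTS NORMALLY DISTRIBUTED (N)
ofv_without <- -12.824593381265490    # OBJECTIVE FUNCTION VALUE WITHOUT CONSTANT

# Constant that NONMEM leaves out of the reported OFV
const <- n_obs * log(2 * pi)
const                                 # matches N*LOG(2PI) CONSTANT up to rounding
ofv_without + const                   # matches OBJECTIVE FUNCTION VALUE WITH CONSTANT
```

The constant depends only on N, so it shifts the whole OFV surface without moving its minimum. OFV differences between models fitted to the same data are therefore unaffected.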

4.2.2 PsN sumo (run summary)

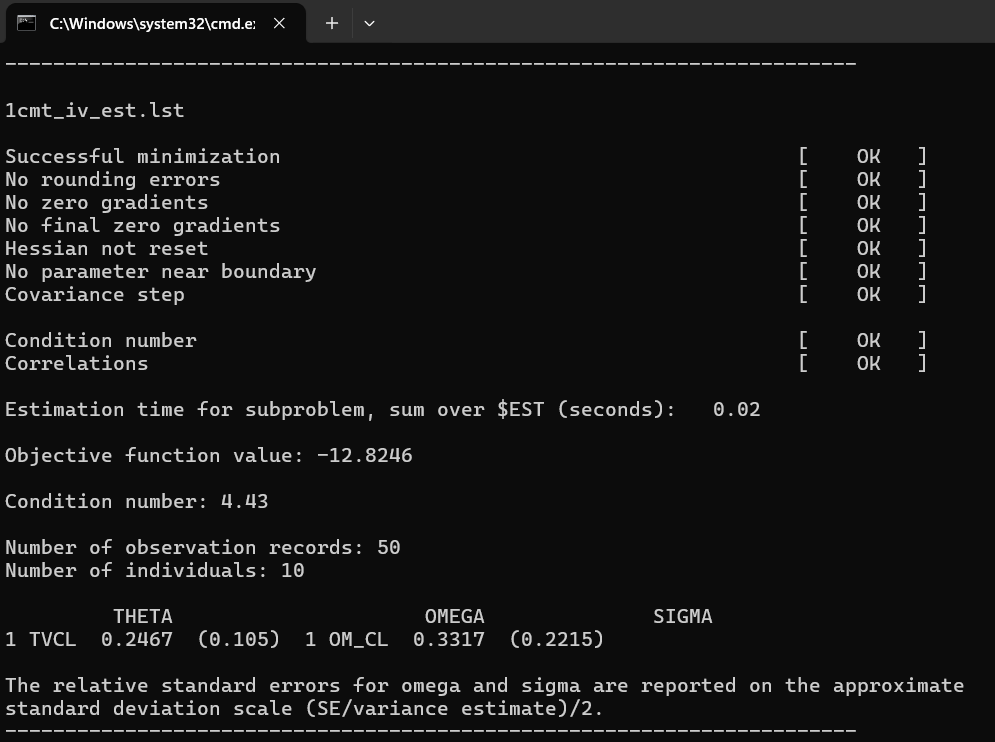

As a next step, we can run the PsN sumo command to get a quick run summary. It tells us whether the minimization was successful and whether there were any rounding errors, zero gradients, and so on.

Figure 3: PsN sumo output summarizing the minimization process.

In this screenshot we can also already see our maximum likelihood estimates for THETA and OMEGA and their associated relative standard errors.
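These relative standard errors can be traced back to the COVARIANCE MATRIX OF ESTIMATE in the .lst file: the standard errors are the square roots of its diagonal, and RSE = SE / estimate. The base-R sketch below uses the rounded covariance values printed in the .lst file and the final estimates from iteration 9 of the .ext file. The RSE for OMEGA is computed on the variance scale, and I have not checked whether sumo uses exactly this convention.

```r
# Covariance matrix of the estimates (TH 1 and OM11), copied from the .lst file
cov_mat <- matrix(c(6.72e-04, 7.98e-04,
                    7.98e-04, 2.37e-03),
                  nrow = 2, byrow = TRUE,
                  dimnames = list(c("TVCL", "OMEGA_CL"), c("TVCL", "OMEGA_CL")))

# Final estimates (iteration 9 of the .ext file)
est <- c(TVCL = 0.246682, OMEGA_CL = 0.1100200)

se      <- sqrt(diag(cov_mat))   # standard errors
rse     <- 100 * se / est        # relative standard errors in %
cor_mat <- cov2cor(cov_mat)      # correlation between the two estimates

round(se, 4)
round(rse, 1)
round(cor_mat, 3)
```

The off-diagonal correlation of about 0.632 matches the .lst value. Note that NONMEM prints the standard errors on the diagonal of its correlation matrix, whereas `cov2cor()` puts ones there.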

4.2.3 Iteration information

But which steps did the NONMEM algorithm take to arrive at the maximum likelihood estimate? We can read in the .ext file, which contains information about the iterations of the estimation process, including the objective function value at each iteration. Here is what it looks like:

```r
# Read the data, skipping the first line
ext_file <- read_table(paste0(base_path, "models/estimation/1cmt_iv_est.ext"), skip = 1)

# keep only non-negative iterations
# (rows with negative ITERATION codes hold e.g. the final estimates and standard errors)
ext_file <- ext_file |>
  filter(ITERATION >= 0)

# rename columns
ext_file <- ext_file |>
  rename(
    CL = "THETA1",
    V = "THETA2",
    RUV_VAR = "SIGMA(1,1)",
    IIV_VAR = "OMEGA(1,1)"
  )

# Show the tibble
ext_file |>
  head(n = 10) |>
  mytbl()
```

| ITERATION | CL | V | RUV_VAR | IIV_VAR | OBJ |
|---:|---:|---:|---:|---:|---:|
| 0 | 0.100000 | 3.15 | 0.1 | 0.1500000 | 41.845437 |
| 1 | 0.168370 | 3.15 | 0.1 | 0.4839690 | -2.743076 |
| 2 | 0.163689 | 3.15 | 0.1 | 0.3881430 | -3.068198 |
| 3 | 0.216552 | 3.15 | 0.1 | 0.1465500 | -11.297115 |
| 4 | 0.216552 | 3.15 | 0.1 | 0.1465500 | -11.297115 |
| 5 | 0.253493 | 3.15 | 0.1 | 0.0862428 | -12.418728 |
| 6 | 0.243287 | 3.15 | 0.1 | 0.1171540 | -12.789118 |
| 7 | 0.246139 | 3.15 | 0.1 | 0.1111950 | -12.823615 |
| 8 | 0.246715 | 3.15 | 0.1 | 0.1099530 | -12.824589 |
| 9 | 0.246682 | 3.15 | 0.1 | 0.1100200 | -12.824593 |

The table shows the first 10 rows of the .ext file with non-negative iteration numbers. The first row, iteration 0, contains the initial estimates (which we provided in the model code) and the associated objective function value. Iterations 1-8 are intermediate steps (iterations 3 and 4 are identical). Iteration 9 reaches the final estimates. The .lst file lists one more iteration (10) with unchanged parameter values and objective function value, after which NONMEM reports MINIMIZATION SUCCESSFUL.
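Looking at the change in OFV between successive iterations is a quick numerical way to see convergence. With the values from the table above, the OFV never increases and stays unchanged from iteration 3 to 4. The last step (8 to 9) only changes it in the sixth decimal place:

```r
# Objective function values per iteration, copied from the .ext table above
ofv <- c(41.845437, -2.743076, -3.068198, -11.297115, -11.297115,
         -12.418728, -12.789118, -12.823615, -12.824589, -12.824593)
iteration <- 0:9

# change in OFV from one iteration to the next
delta_ofv <- diff(ofv)
data.frame(from = iteration[-length(iteration)],
           to = iteration[-1],
           delta_ofv = round(delta_ofv, 6))
```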

The columns carry the following information:

- ITERATION = iteration number
- CL = typical value of clearance in the population (L/h)
- V = volume of distribution (L, fixed)
- RUV_VAR = variance of the residual unexplained variability (fixed)
- IIV_VAR = variance of the inter-individual variability on clearance
- OBJ = objective function value

We can use this output file to visualize how the parameter values and the objective function value change over the iterations. This gives us a better understanding of what is going on during the estimation:

Figure 4: Iterative diagnostics of the NONMEM estimation process, showing the progression of clearance, inter-individual variability, and objective function values over 10 iterations. Fixed parameters (RUV_VAR, V) remain constant.
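The code behind Figure 4 is not shown here. As a dependency-free alternative, the base-R sketch below draws similar panels from the estimated columns of the .ext table, leaving out the fixed V and RUV_VAR. It is my own approximation, not the code that produced the figure.

```r
# Estimated quantities per iteration, copied from the .ext table above
ext <- data.frame(
  ITERATION = 0:9,
  CL      = c(0.100000, 0.168370, 0.163689, 0.216552, 0.216552,
              0.253493, 0.243287, 0.246139, 0.246715, 0.246682),
  IIV_VAR = c(0.1500000, 0.4839690, 0.3881430, 0.1465500, 0.1465500,
              0.0862428, 0.1171540, 0.1111950, 0.1099530, 0.1100200),
  OBJ     = c(41.845437, -2.743076, -3.068198, -11.297115, -11.297115,
              -12.418728, -12.789118, -12.823615, -12.824589, -12.824593)
)

# one panel per quantity, iteration number on the x-axis
op <- par(mfrow = c(1, 3))
for (col in c("CL", "IIV_VAR", "OBJ")) {
  plot(ext$ITERATION, ext[[col]], type = "b", pch = 19,
       xlab = "Iteration", ylab = col, main = col)
}
par(op)  # restore the previous plotting layout
```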

In this exercise, the reproduction of the objective function value for a given set of parameters will be the main goal and this .ext file provides us with the reference solution. I actually don’t want to go down the rabbit hole of trying to reproduce the math behind these optimization algorithms. However, in Section 8, we are going to at least use the optim function in R to partly reproduce the search algorithm.

In the next big chapter we will try to understand the theory behind calculating the log-likelihood needed for the objective function based on our simple example.

5 NLME theory and objective function

5.1 Statistical model

In our little example, we assume a (simple) hierarchical nonlinear mixed-effects (NLME) model for which we want to estimate the parameters. To my understanding, the hierarchical structure comes from having variability defined on a population (= parameter) level and on an individual (= observation) level, where the individual level depends on the parameter level. Let's have a closer look at both of these levels.

5.1.1 Population (parameter) level

The population level is represented by an inter-individual variability (IIV) term, which assumes a log-normal distribution around a typical parameter value. In this simplified example we only consider IIV on clearance and do not consider any other random effects. The population (or parameter) level can be defined as follows:

\[CL_i = \theta_{TVCL} \cdot \exp(\eta_i),~~~~~\eta_i \sim N(0, \omega^2) \tag{1}\]

Here, the individual clearance (\(CL_i\)) is modeled as a log-normally distributed parameter, where (\(\theta_{\text{TVCL}}\)) is the typical clearance value and \(\eta_{i}\) is a random effect accounting for inter-individual variability (IIV). We assume that this random effect follows a normal distribution with mean zero and variance \(\omega^2\). An example plot of such a population level is shown below:

```r
# Set seed for reproducibility
set.seed(123)

# Define population parameters
theta_TVCL <- 10          # Typical clearance (L/h)
omega_sq <- 0.2           # Variance of IIV on clearance
omega <- sqrt(omega_sq)   # Standard deviation of IIV
num_individuals <- 5000   # Number of individuals in the population

# Simulate individual random effects
eta_i <- rnorm(num_individuals, mean = 0, sd = omega)

# Compute individual clearances
CL_i <- theta_TVCL * exp(eta_i)

# Create a data frame for plotting
population_data <- data.frame(
  Individual = 1:num_individuals,
  eta_i = eta_i,
  CL = CL_i
)

# Calculate density for annotation placement
density_CL <- density(CL_i)
max_density <- max(density_CL$y)

# Create the ggplot
p_pop <- ggplot(population_data, aes(x = CL)) +
  # Histogram of clearance values
  geom_histogram(aes(y = after_stat(density)), binwidth = 0.5,
                 fill = "lightgreen", color = "black", alpha = 0.7) +
  # Density curve
  geom_density(color = "darkgreen", linewidth = 1) +
  # Vertical line for typical clearance
  geom_vline(xintercept = theta_TVCL, color = "blue", linetype = "dashed", linewidth = 1) +
  # Labels and theme
  labs(
    title = "Population level: Clearance",
    x = "Clearance (L/h)",
    y = "Density"
  ) +
  theme_bw() +
  # Annotation for typical clearance
  annotate("text", x = theta_TVCL + 5, y = max_density * 0.9,
           label = expression(theta[TVCL]~": Typical Clearance"),
           color = "blue", hjust = 0) +
  # annotate() draws a single arrow (geom_segment with constant aes would draw one per row)
  annotate("segment", x = theta_TVCL + 5, y = max_density * 0.9,
           xend = theta_TVCL, yend = max_density * 0.8,
           arrow = arrow(length = unit(0.2, "cm")), color = "blue") +
  # Annotation for IIV
  annotate("text", x = theta_TVCL + 13, y = max_density * 0.6,
           label = expression(IIV~"("~omega^2~")"),
           color = "darkgreen", hjust = 0.5) +
  annotate("segment", x = theta_TVCL + 10, y = max_density * 0.6,
           xend = theta_TVCL + 3, yend = max_density * 0.55,
           arrow = arrow(length = unit(0.2, "cm")), color = "darkgreen")

# Display the plot
print(p_pop)
```

Figure 5: Distribution of clearance values at the population level, modeled as a log-normal distribution. The dashed blue line indicates the typical clearance value \(\theta_{TVCL}\), while the green histogram and curve represent the density of clearance values in the population, accounting for inter-individual variability.

This plot represents the population level of our model, where the clearance values are sampled from a log-normal distribution around the typical clearance value. The dashed line represents the typical clearance value \(\theta_{TVCL}\), and the green curve and bars represent the distribution of clearances in the population.

The random effect \(\eta_i\) itself follows a normal distribution \(N(0, \omega^2)\) and is visualized below:

Figure 6: Distribution of ETA values at the population level, following a normal distribution centered around 0. The dashed line indicates 0.
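One property of this log-normal model is easy to overlook. \(\theta_{TVCL}\) is the median of the individual clearances. Their mean is \(\theta_{TVCL}\exp(\omega^2/2)\), which is slightly larger. The base-R simulation below checks this, using the same values as in the plot above (\(\theta_{TVCL} = 10\) L/h, \(\omega^2 = 0.2\)). The large sample size only serves to keep the Monte Carlo noise small:

```r
# Log-normal IIV on clearance: CL_i = theta_TVCL * exp(eta_i), eta_i ~ N(0, omega^2)
set.seed(1)
theta_TVCL <- 10    # typical clearance (L/h)
omega_sq   <- 0.2   # IIV variance

eta_sim <- rnorm(1e6, mean = 0, sd = sqrt(omega_sq))
CL_sim  <- theta_TVCL * exp(eta_sim)

median_CL        <- median(CL_sim)                  # close to theta_TVCL
mean_CL          <- mean(CL_sim)                    # noticeably larger than theta_TVCL
mean_CL_analytic <- theta_TVCL * exp(omega_sq / 2)  # analytical mean of the log-normal

round(c(median = median_CL, mean = mean_CL, mean_analytic = mean_CL_analytic), 2)
```

This matters when reading parameter tables. A reported typical value of a log-normally distributed parameter describes the "middle" individual, not the population average.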

5.1.2 Individual (observation) level

The individual level, on the other hand, is defined by the observed and predicted concentrations for each subject. The predictions come from the structural model and depend on the individual parameters, which can be treated as random variables (in our case, CL). The individual level also incorporates the residual unexplained variability (RUV). Its distribution ultimately tells us how to define the likelihood function. The individual level can be defined by:

\[Y_{ij} \mid CL_i = f(x_{ij}; CL_i) + \epsilon_{ij},~~~~~\epsilon_{ij} \sim N(0, \sigma^2) \tag{2}\] where we can note that:

\(Y_{ij}\) is the observed concentration for the \(i^{th}\) individual at the \(j^{th}\) time point, conditionally distributed given the individual’s clearance \(CL_i\).

\(f(x_{ij}; CL_i)\) is the predicted concentration. It depends on \(CL_i\) (the individual clearance) and \(x_{ij}\) (all the information about covariates, dosing and sampling events for the \(i^{th}\) individual at the \(j^{th}\) time point).

\(\epsilon_{ij}\) is the realization of the residual unexplained variability for the \(i^{th}\) individual at the \(j^{th}\) time point. It typically follows a normal distribution \(N(0, \sigma^2)\).

In our example we have two random variables, \(Y_{ij}\) and \(CL_i\), with parameters \(\beta := (\theta_{TVCL}, \omega^2, \sigma^2)\). Of these, we only want to estimate the typical clearance \(\theta_{TVCL}\) and the IIV on clearance \(\omega^2\). The residual unexplained variability \(\sigma^2\) is assumed to be known and fixed. The individual level with RUV is illustrated below:

```r
# Set seed for reproducibility
set.seed(123)

# Define model parameters
theta_TVCL <- 10   # Typical clearance (L/h)
omega <- 0.447     # IIV on clearance (sqrt(omega^2) where omega^2 = 0.2)
sigma <- 0.5       # Residual unexplained variability (standard deviation)
V <- 20            # Volume of distribution (L)
Dose <- 100        # Dose administered (mg)

# Simulate data for one individual
individual_id <- 1
eta_i <- rnorm(1, mean = 0, sd = omega)   # Individual random effect
CL_i <- theta_TVCL * exp(eta_i)           # Individual clearance

# Define time points
time <- seq(0, 10, by = 1)   # From 0 to 10 hours

# Compute predicted concentrations based on the 1 cmt model
C_pred <- (Dose / V) * exp(-(CL_i / V) * time)

# Add residual unexplained variability
epsilon_ij <- rnorm(length(time), mean = 0, sd = sigma)
C_obs <- C_pred + epsilon_ij

# Create a data frame
ind_lvl_data <- data.frame(
  Time = time,
  Predicted = C_pred,
  Observed = C_obs
)

# Compute the upper and lower bounds (+-1 sigma) around the prediction
ind_lvl_data <- ind_lvl_data |>
  mutate(
    Upper = Predicted + sigma,
    Lower = Predicted - sigma
  )

# Create the ggplot
p <- ggplot(ind_lvl_data, aes(x = Time)) +
  # Shaded area around prediction; fill is mapped so that scale_fill_manual() draws a legend
  geom_ribbon(aes(ymin = Lower, ymax = Upper, fill = "lightblue"), alpha = 0.5) +
  # Predicted concentration line
  geom_line(aes(y = Predicted), color = "black", linewidth = 1) +
  # Observed data points
  geom_point(aes(y = Observed), color = "red", size = 2) +
  # Labels and theme
  labs(
    title = "Individual level",
    x = "Time (hours)",
    y = "Concentration (mg/L)"
  ) +
  theme_bw() +
  # Annotation for f(x)
  annotate("text", x = 6, y = 1.8, label = "f(x): Predicted Concentration",
           color = "black", hjust = 0) +
  annotate("segment", x = 7.2, y = 1.6, xend = 6, yend = 0.5,
           arrow = arrow(length = unit(0.2, "cm")), color = "black") +
  # Annotation for Y_ij
  annotate("text", x = 2, y = 4.15, label = "Yij: Observed Concentration",
           color = "red", hjust = 0) +
  annotate("segment", x = 2, y = 4.1, xend = 1.1, yend = 4.15,
           arrow = arrow(length = unit(0.2, "cm")), color = "red") +
  # Annotation for residual variability
  annotate("text", x = 1.8, y = 0.6, label = "Residual Unexplained Variability (σ)",
           color = "blue", hjust = 0.5) +
  annotate("segment", x = 2, y = 0.8, xend = 3, yend = 1,
           arrow = arrow(length = unit(0.2, "cm")), color = "blue") +
  # Legend for the shaded band
  scale_fill_manual(
    name = "Components",
    values = c("lightblue" = "lightblue"),
    labels = c("±1σ around Prediction")
  ) +
  guides(fill = guide_legend(override.aes = list(alpha = 0.5))) +
  scale_x_continuous(breaks = seq(0, 10, 2))

# Display the plot
print(p)
```

Figure 7: Illustrative example of observed and predicted concentrations at the individual level, with residual unexplained variability shown as the shaded area around predictions.

This plot shows an illustrative example of predicted and observed concentrations, together with the residual unexplained variability around the prediction. It represents the individual (observation) level of our model. Note that this is a simple illustration. Real datasets would typically not contain negative concentrations; such values would instead be flagged as below the limit of quantification.
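The sketch below makes the two levels concrete by chaining Equation 1 and Equation 2 for several individuals. The helper name `simulate_two_levels()` and the sampling times are hypothetical. The one-compartment bolus prediction and the parameter values (V = 20 L, Dose = 100 mg, \(\theta_{TVCL} = 10\) L/h, \(\omega^2 = 0.2\), \(\sigma = 0.5\)) mirror the illustration above:

```r
# Hypothetical helper: simulate observations from the two-level model
# Level 1 (population): CL_i = theta_TVCL * exp(eta_i),  eta_i ~ N(0, omega^2)
# Level 2 (individual): Y_ij = f(x_ij; CL_i) + eps_ij,   eps_ij ~ N(0, sigma^2)
simulate_two_levels <- function(n_id, times, theta_TVCL, omega_sq, sigma_sq,
                                V = 20, Dose = 100) {
  eta <- rnorm(n_id, mean = 0, sd = sqrt(omega_sq))      # one eta per individual
  CL  <- theta_TVCL * exp(eta)                           # individual clearances
  sims <- expand.grid(time = times, ID = seq_len(n_id))  # all time x ID combinations
  sims$CL    <- CL[sims$ID]
  sims$IPRED <- (Dose / V) * exp(-(sims$CL / V) * sims$time)       # f(x_ij; CL_i)
  sims$DV    <- sims$IPRED + rnorm(nrow(sims), 0, sqrt(sigma_sq))  # add eps_ij
  sims
}

set.seed(42)
sim <- simulate_two_levels(n_id = 3, times = c(1, 2, 4, 8),
                           theta_TVCL = 10, omega_sq = 0.2, sigma_sq = 0.25)
head(sim)
```

Setting \(\omega^2\) or \(\sigma^2\) to 0 switches off the corresponding level. This is a handy way to see how much each level contributes on its own.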

5.2 Maximum likelihood estimation

In our case, we have only two parameters to estimate: \(\theta_{TVCL}\) and \(\omega^2\). The overall goal is to infer the parameters of interest \((\hat{\theta}_{TVCL}, \hat{\omega}^2)\) from our observed data \(y_{1:n}\). Here, \(y_{i}\) denotes the vector of \(m_i\) observations of the \(i^{th}\) individual, out of \(n\) individuals in total. Ideally, we would like to infer the parameters by directly maximizing the complete data log-likelihood (\(\ln L\)) function:

Please note that Equation 3 and Equation 4 represent the complete data log-likelihood; more on that later in Section 5.2.2. We work with the log-likelihood because it makes many calculations easier (e.g., products become sums). Furthermore, likelihood terms can become very small, which can lead to numerical difficulties (underflow). Taking the logarithm avoids this issue.
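Here is a small illustration of the numerical argument, with hypothetical numbers. We take 400 observations that each sit 5 standard deviations away from their prediction. Multiplying their densities underflows to exactly zero in double precision. Summing their log-densities stays finite:

```r
# 400 hypothetical observations, each 5 SD away from its prediction
y   <- rep(5, 400)
lik <- dnorm(y, mean = 0, sd = 1)   # each density is about 1.5e-6

prod_lik   <- prod(lik)                        # underflows to 0 in double precision
sum_loglik <- sum(dnorm(y, 0, 1, log = TRUE))  # finite, about -5367.6

c(product = prod_lik, log_likelihood = sum_loglik)
```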

Before we continue, let’s first remind ourselves what likelihood is all about and what is the difference compared to probability.

5.2.1 Likelihood vs probability

I personally see the difference between likelihood and probability as a matter of the “direction” from which we look at things. Probability is about looking forward (into the future): “If we have a fully specified model and set of parameters, what are the probabilities of certain future events happening?” Likelihood, on the other hand, is about looking backward: “Now that we have the data / made that particular observation, what is the most likely model or set of parameters that could have produced it?”

Let’s take a simple example to visualize the likelihood. We assume that human height is normally distributed, and we randomly pick a person on the street who is 180 cm tall. Which set of parameters is more likely to have produced this height measurement? One that assumes a mean height of 175 cm and a standard deviation of 30 cm, or one that assumes a mean height of 190 cm and a standard deviation of 5 cm?

```r
# Define the parameter sets
params <- data.frame(
  parameter_set = c("Mean = 175 cm, SD = 30 cm", "Mean = 190 cm, SD = 5 cm"),
  mean = c(175, 190),
  sd = c(30, 5)
)

# Define the range of heights for plotting
x_values <- seq(100, 250, by = 0.1)

# Generate density data for each parameter set
density_data <- params |>
  rowwise() |>
  do(data.frame(
    parameter_set = .$parameter_set,
    x = x_values,
    density = dnorm(x_values, mean = .$mean, sd = .$sd)
  )) |>
  ungroup()

# Calculate the density (likelihood) at 180 cm for each parameter set
likelihoods <- params |>
  rowwise() |>
  mutate(density_at_180 = dnorm(180, mean = mean, sd = sd)) |>
  select(parameter_set, density_at_180)

# Merge the likelihoods with the density data for plotting
density_data <- density_data |>
  left_join(likelihoods, by = "parameter_set")

# Create the plot
ggplot(density_data, aes(x = x, y = density)) +
  geom_line(color = "darkblue") +                                     # Density curves
  facet_wrap(~ parameter_set, ncol = 1) +                             # Separate panels
  geom_vline(xintercept = 180, linetype = "dashed", color = "red") +  # Dashed line at 180 cm
  geom_point(data = likelihoods, aes(x = 180, y = density_at_180),
             color = "blue", size = 2, pch = 8) +                    # Point at 180 cm
  geom_text(data = likelihoods,
            aes(x = 180, y = density_at_180,
                label = sprintf("Likelihood: %.5f", density_at_180)),
            hjust = 1.1, vjust = -0.5, color = "blue") +             # Likelihood label on the left
  labs(title = "Likelihood of Observing a Height of 180 cm",
       x = "Height (cm)",
       y = "Density") +
  theme_bw()                                                          # Black-and-white theme
```

Figure 8: Comparison of likelihoods for observing a height of 180 cm under two parameter sets: (1) mean = 175 cm, SD = 30 cm and (2) mean = 190 cm, SD = 5 cm.

We can see that the parameters \(\mu = 175~cm\) and \(\sigma = 30~cm\) are more likely to have produced the observed height of 180 cm than the alternative set of parameters. The likelihood can be calculated with the respective probability density function (or, for discrete data, the probability mass function). More on that later. What becomes clear is that the concept of likelihood fundamentally requires observed data to be meaningful. This fact leads to an issue when trying to calculate the joint likelihood for our NLME model.
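The same comparison can be computed directly with `dnorm()`, which evaluates the normal probability density. The second part of the sketch keeps the observation fixed and varies only the mean, which is exactly the "looking backward" view. The SD is fixed at 5 cm here, an arbitrary choice for illustration. The likelihood peaks when the mean equals the observed 180 cm:

```r
# Likelihood of the two parameter sets for the single observation of 180 cm
y_obs <- 180
lik_a <- dnorm(y_obs, mean = 175, sd = 30)   # about 0.0131
lik_b <- dnorm(y_obs, mean = 190, sd = 5)    # about 0.0108

# Data fixed, parameter varied: likelihood as a function of the mean (SD fixed at 5 cm)
lik_mu <- function(mu) dnorm(y_obs, mean = mu, sd = 5)
mu_hat <- optimize(lik_mu, interval = c(150, 210), maximum = TRUE)$maximum

c(lik_a = lik_a, lik_b = lik_b, mu_hat = mu_hat)
```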

5.2.2 The problem with the joint likelihood

We are trying to maximize the joint log-likelihood \(\ln L\left(\theta_{TVCL}, \omega^2| y_{1:n}, \eta_{1:n}\right)\). This is the likelihood of the parameters \(\theta_{TVCL}\) and \(\omega^2\) given that we have observed \(y_{1:n}\) and \(\eta_{1:n}\). Now we have a problem. We directly observe \(y_{1:n}\), but we never observe the \(\eta_i\) values (or the respective \(CL_i\)). In other words: you will never receive a dataset with an “observed” individual clearance or an “observed” individual random effect. This is why \(\eta_i\) is called an unobserved latent variable. Previously we defined the complete data log-likelihood in Equation 3 and Equation 4, which is the log-likelihood of both the observed data and the unobserved latent variables. But without observations for \(\eta_{1:n}\) we cannot compute the complete data likelihood.

Now what? Our approach is to get rid of the dependence on \(\eta_i\). This is where the so-called marginal likelihood comes into play, which no longer depends on an \(\eta_i\) observation. In our case, the maximum likelihood estimates are based on the marginal likelihood:

Please note that we no longer depend on an actual \(\eta_{1:n}\) observation, only on \(y_{1:n}\). To set up the actual equation, let’s first rewrite the likelihood as a probability:

For each individual, we have \(m_i\) observations and \(y_{i}\) represents a vector of these \(m_i\) observations. We can also write it more explicitly to avoid misunderstandings, again assuming independence across observations.

To align the likelihood expression with the structure of our model in Equation 1 and Equation 2, we need to reformulate Equation 8 to explicitly include the individual random effects \(\eta_i\). This allows us to express the likelihood in terms of both the individual-level and population-level components, with which we can actually do calculations. In other words: Equation 8 does not yet reflect the specific hierarchical form of our model. By reformulating the likelihood (see next steps), we transform this generic form into a structure that directly incorporates our model’s individual and population components and allows us to use our previously specified model.

In this first step, we are integrating over all possible values of \(\eta_i\) (since we can’t directly observe \(\eta_i\)). We can now further split this marginal likelihood equation by using the chain rule of probability, which brings us closer to the population and individual level structure of our model and closer to a state where we can use the model:

As \(p(y_{ij}| \eta_i; \theta_{TVCL}, \omega^2)\) does not actually depend on \(\omega^2\), and \(p(\eta_i | \theta_{TVCL}, \omega^2)\) does not actually depend on \(\theta_{TVCL}\), we can then simplify the equation to:

The integral now consists of two parts: the individual-level term \(p(y_{ij}| \eta_i; \theta_{TVCL})\) and the population-level term \(p(\eta_i | \omega^2)\). The intuition is roughly this. Take a given \(\eta_i\) within the integral. The population term \(p(\eta_i |\omega^2)\) tells us how likely this particular \(\eta_i\) value is in the population. The individual term \(p(y_{ij}| \eta_i; \theta_{TVCL})\) tells us how likely the \(j^{th}\) observation of the \(i^{th}\) individual is, given that \(\eta_i\) value. The \(\eta_i\) values where the product of both terms is large contribute most to the integral, and thus to the marginal likelihood. These are values that are plausible in the population and under which the observed \(y_{ij}\) are likely.
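For one individual with one random effect, this integral can still be evaluated by brute-force numerical integration. That gives a useful reference value for the approximations discussed next. Below is a minimal sketch with `integrate()`. The individual (sampling times, observations, \(\sigma^2 = 0.1\)) is hypothetical and is not the dataset simulated in Section 3. The structural model is the one-compartment bolus model (V = 20 L, Dose = 100 mg). With several random effects per individual, this kind of quadrature quickly becomes expensive, which is one reason approximations are used:

```r
# Hypothetical individual (all values are illustrative assumptions)
Dose       <- 100                    # mg, IV bolus
V          <- 20                     # L, fixed
times      <- c(0.5, 1, 2, 4)        # h
y_i        <- c(4.0, 2.9, 1.9, 0.6)  # mg/L, hypothetical observations
sigma_sq   <- 0.1                    # fixed RUV variance
theta_TVCL <- 10                     # L/h
omega_sq   <- 0.2                    # IIV variance

# Structural model f(x_ij; CL_i) with CL_i = theta_TVCL * exp(eta_i)
f_pred <- function(eta) (Dose / V) * exp(-(theta_TVCL * exp(eta) / V) * times)

# Integrand of the marginal likelihood:
# prod_j p(y_ij | eta_i; theta_TVCL) * p(eta_i | omega^2)
integrand <- function(eta) {
  # integrate() passes a vector of eta values, so evaluate them one by one
  sapply(eta, function(e) {
    prod(dnorm(y_i, mean = f_pred(e), sd = sqrt(sigma_sq))) *
      dnorm(e, mean = 0, sd = sqrt(omega_sq))
  })
}

# Integrate over eta_i; +-3 covers more than 6 prior SDs (sqrt(0.2) is about 0.45)
L_i <- integrate(integrand, lower = -3, upper = 3)$value
L_i
```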

So is it all good now? Kind of. We got rid of the dependence on directly observing \(\eta_i\) for the complete data log-likelihood and have instead a nice marginal likelihood equation. However, solving the marginal likelihood is much harder due to this complex integral. So the next important task is to find a way to deal with this integral. And if it is hard to calculate, why not just approximate it?

5.3 Approximating the integral

5.3.1 Laplacian approximation

So far we’ve seen that we cannot easily solve the integral in the marginal likelihood equation. As far as I understand, a model that is nonlinear in \(\eta_i\) generally has no closed-form solution for it, so in practice we need to approximate it. To simplify things a bit, we will focus on the individual likelihood \(L_i\) for now (for one single individual i). That way we avoid writing out the product over the population every time:

\[L_i\left(\theta_{TVCL}, \omega^2 | y_i\right) = \int \left(\prod_{j=1}^{m_i} p(y_{ij}| \eta_i; \theta_{TVCL})\right) \cdot p(\eta_i | \omega^2)~d\eta_i \tag{12}\]

In the end, we have to take the product of all individual Likelihoods to get the likelihood for the population. Now we are going to tackle the integral. Apparently, one way of approximating integrals is the Laplacian approximation. It is a method which simplifies difficult integrals by focusing on the most important part (contribution-wise) of the function being integrated, which is the point where the function reaches its peak (the maximum or mode of the function). The idea is to approximate the function around this maximum point by a so-called second-order Taylor expansion. This approximation assumes that the integrand behaves like a Gaussian (bell-shaped) function around its maximum. The Taylor expansion with different orders (n = 1/2/3/10) is visualized here (2024):

Figure 9: Visualization of Taylor expansions of order n = 1, 2, 3 and 10 of the logarithmic function ln(x).
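Here is a small numerical counterpart to Figure 9, for the second-order expansion only. It expands ln(x) around \(x_0 = 1\); that expansion point is my own choice for illustration. The approximation is very accurate close to \(x_0\) and deteriorates further away. This is why the Laplacian approximation expands around the point that matters most for the integral:

```r
# Second-order Taylor expansion of ln(x) around x0, using
# ln'(x) = 1/x and ln''(x) = -1/x^2:
# ln(x) ~ ln(x0) + (x - x0)/x0 - (x - x0)^2 / (2 * x0^2)
taylor2_log <- function(x, x0 = 1) {
  log(x0) + (x - x0) / x0 - (x - x0)^2 / (2 * x0^2)
}

x   <- c(1.1, 1.5, 2.5)                  # increasingly far away from x0 = 1
err <- abs(log(x) - taylor2_log(x))      # absolute approximation error

cbind(x = x, exact = log(x), taylor2 = taylor2_log(x), abs_error = err)
```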

Now, integrals have a useful property: they often become analytically solvable if they take a certain form. If we can express the integral in the following exponential form:

\[\int{\exp(f(x))~dx} \tag{13}\]

where \(f(x)\) is a second-order polynomial of the form \(f(x) = ax^2 + bx + c\) with a negative quadratic coefficient (\(a < 0\)), then \(f(x)\) has a unique maximum and we can solve the integral analytically. The next step is therefore to bring our integral into this form. That lets us use this property to kick out the annoying integral and work directly with the analytical solution.
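Completing the square gives the closed form for \(a < 0\): \(\int_{-\infty}^{\infty} \exp(ax^2+bx+c)\,dx = \sqrt{\pi/(-a)}\,\exp\left(c - b^2/(4a)\right)\). A quick numerical check in R with arbitrary example coefficients:

```r
# Closed form for a < 0 (integral over the whole real line):
#   int exp(a x^2 + b x + c) dx = sqrt(pi / -a) * exp(c - b^2 / (4 a))
a <- -2; b <- 1; c0 <- 0.5   # arbitrary example coefficients with a < 0

analytic  <- sqrt(pi / -a) * exp(c0 - b^2 / (4 * a))
numerical <- integrate(function(x) exp(a * x^2 + b * x + c0),
                       lower = -Inf, upper = Inf, rel.tol = 1e-8)$value

c(analytic = analytic, numerical = numerical)   # both about 2.3415
```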

5.3.2 Bringing the expression in the right form

To bring our likelihood expression into the right form, we can apply an \(\exp(\ln())\) operator. On its own this changes nothing. However, it lets us simplify the inner expression a little, because products turn into sums under the logarithm. And, as mentioned above, we need the exponential form of Equation 13 to solve the integral analytically. We therefore take Equation 12 and apply the \(\exp(\ln())\) operator, which re-expresses the individual likelihood as:

We have successfully brought our expression into a format which allows us to later get rid of the integral itself. Please note the similarity between Equation 13 and Equation 17. In a next step we want to approximate \(g_i(\eta_i)\) as a Gaussian (second-order polynomial) function around its maximum point (mode) \(\eta_i^*\) via a Taylor expansion.

5.3.3 Taylor expansion

Okay, let’s approximate \(g_i(\eta_i)\) by a second-order Taylor expansion at the point \(\eta_i^*\), since we cannot calculate that integral explicitly and directly¹. A second-order Taylor expansion at \(\eta_i^*\) is given by the following expression:

During Laplacian estimation, we choose \(\eta_i^*\) to be the mode of \(g_i\), for two reasons. First, the integrand contributes most to the integral around this point. A Taylor approximation is always most accurate at the point around which we expand it, so it makes sense to expand around the point that matters most for the integral. Second, the first derivative at the mode (\(g_i'(\eta_i^*)\)) is zero. This allows us to drop the second (first-order) term of the Taylor expansion:

Great! We have now approximated the complex expression \(g_i(\eta_i)\) and can try to get rid of the integral in the next step.
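Numerically, finding \(\eta_i^*\) is a one-dimensional maximization of \(g_i\). The sketch below uses `optimize()` and central finite differences to confirm the two properties we rely on: \(g_i'(\eta_i^*) \approx 0\) and \(g_i''(\eta_i^*) < 0\). The individual is hypothetical (sampling times, observations, \(\sigma^2 = 0.1\), \(\theta_{TVCL} = 10\) L/h, \(\omega^2 = 0.2\)), and the step size h = 1e-4 is an arbitrary choice:

```r
# Hypothetical individual (all values are illustrative assumptions)
Dose <- 100; V <- 20                 # mg, L (one-compartment IV bolus)
times <- c(0.5, 1, 2, 4)             # h
y_i   <- c(4.0, 2.9, 1.9, 0.6)       # mg/L
sigma_sq <- 0.1; theta_TVCL <- 10; omega_sq <- 0.2

# g_i(eta) = sum_j ln p(y_ij | eta; theta_TVCL) + ln p(eta | omega^2)
g_i <- function(eta) {
  f <- (Dose / V) * exp(-(theta_TVCL * exp(eta) / V) * times)
  sum(dnorm(y_i, mean = f, sd = sqrt(sigma_sq), log = TRUE)) +
    dnorm(eta, mean = 0, sd = sqrt(omega_sq), log = TRUE)
}

# Mode eta_i*: one-dimensional maximization of g_i
eta_star <- optimize(g_i, interval = c(-3, 3), maximum = TRUE, tol = 1e-10)$maximum

# Central finite differences for g_i' and g_i'' at the mode
h  <- 1e-4
g1 <- (g_i(eta_star + h) - g_i(eta_star - h)) / (2 * h)
g2 <- (g_i(eta_star + h) - 2 * g_i(eta_star) + g_i(eta_star - h)) / h^2

c(eta_star = eta_star, g1 = g1, g2 = g2)   # g1 close to 0, g2 negative
```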

5.3.4 Kicking out the integral

Okay, so the first thing we do is to take Equation 17 and substitute \(g_i(\eta_i)\) with the 2nd-order approximation we obtained via Equation 20. This gives us:

Our first goal is to separate the expressions that depend on \(\eta_i\) from those that do not. This will later allow us to get rid of the integral. The term \(g_i(\eta_i^*)\) is independent of \(\eta_i\) (it is just evaluated at the mode \(\eta_i^*\)), while the term \(\frac{1}{2} g_i''(\eta_i^*)(\eta_i-\eta_i^*)^2\) does depend on \(\eta_i\). Therefore, we now split the expression into two parts:

For our plan (kicking out the integral) to work, the exponent of the second term must be negative. This matters because we want to approximate the integrand by a Gaussian function, which is only possible if this exponent is negative. Technically, this is guaranteed: we are expanding around the mode (a maximum), where the second derivative of our function is negative. However, I want to align more with the reference solution in Wang (2007), so I rewrite the expression in a way that makes this more explicit:

We just introduced a negative sign and took the absolute value of the second derivative. Now we can substitute \(g_i(\eta_i^*)\) with the respective expression given in Equation 18 (and evaluated at the mode \(\eta_i^*\)) and get:

Now the term \(\exp\left(\left(\sum_{j=1}^{m_i} \ln \left(p(y_{ij}| \eta_i^*; \theta_{TVCL})\right)\right) + \ln \left(p(\eta_i^* | \omega^2)\right)\right)\) does not depend anymore on \(\eta_i\) (over which we are integrating), since the expression is just evaluated at a given value of \(\eta_i^*\). This means it is a constant and can be factored out of the integral:

Remember the trick we used to bring the integral into the right form? The \(\exp(\ln())\) operator is not needed for the factored-out term, so we can simplify it to \(\left(\prod_{j=1}^{m_i} p(y_{ij}| \eta_i^*; \theta_{TVCL})\right) \cdot p(\eta_i^* | \omega^2)\). Please note that the summation becomes a product again as we transform back from the log domain:

Now the whole plan of those who came up with this derivation works out: the remaining integral is the integral of a Gaussian function and can be solved analytically. Let’s briefly focus on the integral part of Equation 27:

Finally the magic happens. With all the work we have invested we can finally harvest the fruits. The integral disappears and we are left with a simple expression.
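For completeness, here is how this result follows from the general closed form behind Equation 13: \(\int_{-\infty}^{\infty}\exp(ax^2+bx+c)\,dx = \sqrt{\pi/(-a)}\,\exp\left(c-b^2/(4a)\right)\) for \(a<0\). The sketch writes \(\kappa_i := |g_i''(\eta_i^*)|\) as a shorthand (my notation, not used elsewhere in this post) and expands the exponent of the remaining integrand:

\[
\begin{aligned}
-\frac{1}{2}\kappa_i(\eta_i-\eta_i^*)^2 &= \underbrace{\left(-\frac{\kappa_i}{2}\right)}_{a}\eta_i^2 + \underbrace{\kappa_i\eta_i^*}_{b}\,\eta_i + \underbrace{\left(-\frac{\kappa_i}{2}\eta_i^{*2}\right)}_{c} \\
c - \frac{b^2}{4a} &= -\frac{\kappa_i}{2}\eta_i^{*2} + \frac{\kappa_i^2\eta_i^{*2}}{2\kappa_i} = 0 \\
\int_{-\infty}^{\infty} \exp\left(-\frac{1}{2}\kappa_i(\eta_i-\eta_i^*)^2\right) d\eta_i &= \sqrt{\frac{\pi}{\kappa_i/2}}\cdot e^{0} = \sqrt{\frac{2\pi}{\left|g_i''(\eta_i^*)\right|}}
\end{aligned}
\]

So the location \(\eta_i^*\) only shifts the bell curve and does not change the area under it. Only the curvature \(\kappa_i\) does.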

To my understanding, we have now achieved an important goal: we turned a hard-to-calculate integral into an analytical expression, which is much easier to evaluate. The only remaining challenge is the calculation of the second derivative \(g_i''(\eta_i^*)\), which is not entirely straightforward. Substituting the simplified integral part from Equation 34 back into our individual likelihood expression (Equation 27), we get:

Here, too, we need to be careful. As mentioned above, \(g_i''(\eta_i^*)\) is expected to be negative, since \(g_i\) has negative curvature at its maximum. Wang (2007) writes \(-g_i''(\eta_i^*)\), which is the same as \(|g_i''(\eta_i^*)|\). I am going to stick to the absolute value out of convenience and because I find it a bit easier to follow.
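How good is this approximation in a simple case? The sketch below compares Equation 35 with brute-force numerical integration of Equation 17, i.e., of \(\exp(g_i(\eta_i))\), for one hypothetical individual. It uses a finite-difference second derivative instead of the analytical one. All data values and the step size are illustrative assumptions:

```r
# Hypothetical individual (all values are illustrative assumptions)
Dose <- 100; V <- 20                 # mg, L (one-compartment IV bolus)
times <- c(0.5, 1, 2, 4)             # h
y_i   <- c(4.0, 2.9, 1.9, 0.6)       # mg/L
sigma_sq <- 0.1; theta_TVCL <- 10; omega_sq <- 0.2

g_i <- function(eta) {
  f <- (Dose / V) * exp(-(theta_TVCL * exp(eta) / V) * times)
  sum(dnorm(y_i, mean = f, sd = sqrt(sigma_sq), log = TRUE)) +
    dnorm(eta, mean = 0, sd = sqrt(omega_sq), log = TRUE)
}

# Laplacian approximation: L_i ~ exp(g_i(eta*)) * sqrt(2 * pi / |g_i''(eta*)|)
eta_star  <- optimize(g_i, interval = c(-3, 3), maximum = TRUE, tol = 1e-10)$maximum
h         <- 1e-4
g2        <- (g_i(eta_star + h) - 2 * g_i(eta_star) + g_i(eta_star - h)) / h^2
L_laplace <- exp(g_i(eta_star)) * sqrt(2 * pi / abs(g2))

# Reference: numerical integration of exp(g_i(eta)) over eta
L_numeric <- integrate(function(e) sapply(e, function(x) exp(g_i(x))),
                       lower = -3, upper = 3)$value

rel_diff <- (L_laplace - L_numeric) / L_numeric
c(laplace = L_laplace, numeric = L_numeric, rel_diff = rel_diff)
```

In this well-behaved case the two values are very close. The approximation can be expected to get worse when \(\exp(g_i(\eta_i))\) is clearly skewed, e.g., with sparse data per individual.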

Now we can move a step further and translate it into something which is more familiar to us: The objective function in NONMEM. In the next section, we are going to spell out the missing expressions and also define the actual equation for the second derivative \(g_i''(\eta_i^*)\).

5.4 Defining the Objective Function

5.4.1 General

Okay, so our next task is to define the objective function in NONMEM. We know that NONMEM calculates -2 times the log-likelihood (Bauer 2020). To my understanding, the main reason for using the log of the likelihood is numerical stability. The main reason for the factor -2 is that the difference in -2 log-likelihood between two nested models is asymptotically chi-square distributed, which allows statistical testing (likelihood ratio test). The negative sign also turns our maximization problem into a minimization problem, which is the convention most numerical optimizers follow. The -2 log-likelihood for a single individual is defined as:

For the sake of simplicity we are still focusing on a single individual \(i\) and its contribution to the objective function. In the end, we would have to sum up the individual contributions to the objective function \(OF_i\) to get the final objective function. When we substitute the individual likelihood from Equation 35 into Equation 36, we get:

From here on it makes sense to split up the terms in order to better understand what is going on. We can identify three terms in the expression given by Equation 39:

First term: \(\left(\sum_{j=1}^{m_i} -2 \ln\left(p(y_{ij}| \eta_i^*; \theta_{TVCL})\right)\right)\)

Second term: \(-2 \ln \left(p(\eta_i^*|\omega^2)\right)\)

Third term: \(-2 \ln \left(\sqrt{\frac{2\pi}{\left| g_i''(\eta_i^*)\right|}}\right)\)
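Before spelling out each term, here is how the three terms fit together numerically. The sketch uses a hypothetical individual and a finite-difference \(g_i''\) instead of the analytical derivative. The final check confirms that the three terms add up to \(-2\ln\) of the Laplacian individual likelihood. This holds because Term 1 plus Term 2 is just \(-2\,g_i(\eta_i^*)\):

```r
# Hypothetical individual (all values are illustrative assumptions)
Dose <- 100; V <- 20                 # mg, L (one-compartment IV bolus)
times <- c(0.5, 1, 2, 4)             # h
y_i   <- c(4.0, 2.9, 1.9, 0.6)       # mg/L
sigma_sq <- 0.1; theta_TVCL <- 10; omega_sq <- 0.2

f_pred <- function(eta) (Dose / V) * exp(-(theta_TVCL * exp(eta) / V) * times)
g_i <- function(eta) {
  sum(dnorm(y_i, mean = f_pred(eta), sd = sqrt(sigma_sq), log = TRUE)) +
    dnorm(eta, mean = 0, sd = sqrt(omega_sq), log = TRUE)
}

# Mode of g_i and finite-difference second derivative at the mode
eta_star <- optimize(g_i, interval = c(-3, 3), maximum = TRUE, tol = 1e-10)$maximum
h  <- 1e-4
g2 <- (g_i(eta_star + h) - 2 * g_i(eta_star) + g_i(eta_star - h)) / h^2

# The three terms of the individual objective function OF_i
term1 <- sum(-2 * dnorm(y_i, mean = f_pred(eta_star), sd = sqrt(sigma_sq), log = TRUE))
term2 <- -2 * dnorm(eta_star, mean = 0, sd = sqrt(omega_sq), log = TRUE)
term3 <- -2 * log(sqrt(2 * pi / abs(g2)))
OF_i  <- term1 + term2 + term3

c(term1 = term1, term2 = term2, term3 = term3, OF_i = OF_i)
```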

Let’s spell out each of these terms in more detail and see how they can be calculated.

5.4.2 Term 1

Let’s tackle the first term of Equation 39. It is given by:

\[\sum_{j=1}^{m_i} -2 \ln\left(p(y_{ij}| \eta_i^*; \theta_{TVCL})\right) \tag{40}\]

The question we are asking is: “How likely is it to observe a certain set of data points \(y_i\) (= a series of observations for an individual), given the most likely individual parameter \(\eta_i^*\) and \(\theta_{TVCL}\)?” Please note that this expression seems to be independent of \(\omega^2\), but it is not. Our most likely \(\eta_i^*\) is the mode of \(g_i(\eta_i)\), which includes the population term \(p(\eta_i | \omega^2)\). So there is a hidden dependence on \(\omega^2\) here. Typically, the first term would also involve the residual unexplained variance \(\sigma^2\). In our simple example, however, it is fixed to a known value, so we don’t have to estimate it.

We assume a normal distribution (given by the RUV) around our model predictions \(f(x_{ij}; \theta_i)\). Please refer to Figure 7 for a refresher. To understand the concept a bit better, let’s illustrate it for a single data point of a single individual (e.g., a concentration of 2 mg/L measured 1 h after the dose):

```r
# Set seed for reproducibility (optional)
set.seed(123)

# Define fixed parameters
Dose <- 100          # Dose administered
V_D <- 10            # Volume of distribution
eta_i <- 0           # Individual random effect
sigma <- sqrt(0.1)   # Residual unexplained variability (standard deviation; variance = 0.1)

# Define population parameters (Theta_TVCL)
Theta_TVCL_values <- c(10, 15, 20)

# Define fixed time point (t)
t <- 1   # You can change this as needed

# Compute the structural model predictions for each Theta_TVCL
# using the formula C(t) = (Dose / V_D) * exp(-Theta_TVCL / V_D * t)
model_predictions <- data.frame(
  Theta_TVCL = Theta_TVCL_values,
  C_t = (Dose / V_D) * exp(-Theta_TVCL_values / V_D * t)
)

# Define the observed data point y_i
y_i <- 2

# Create a sequence of y values for plotting the PDFs
y_values <- seq(min(model_predictions$C_t) - 3 * sigma,
                max(model_predictions$C_t) + 3 * sigma,
                length.out = 1000)

# Create a data frame with density values for each Theta_TVCL
density_data <- model_predictions |>
  rowwise() |>
  do(data.frame(
    Theta_TVCL = .$Theta_TVCL,
    y = y_values,
    density = dnorm(y_values, mean = .$C_t, sd = sigma)
  )) |>
  ungroup()

# Calculate the likelihood and -2 log likelihood for the observed y_i
likelihood_data <- model_predictions |>
  mutate(
    likelihood = dnorm(y_i, mean = C_t, sd = sigma),
    neg2_log_likelihood = -2 * log(likelihood)
  )

# Merge likelihood data with density_data for annotation purposes
density_data <- density_data |>
  left_join(likelihood_data, by = "Theta_TVCL")

# Create a named vector for custom facet labels
facet_labels <- setNames(paste("TVCL =", Theta_TVCL_values), Theta_TVCL_values)

# Start plotting
ggplot(density_data, aes(x = y, y = density)) +
  # Plot the density curves
  geom_line(color = "blue", linewidth = 1) +
  # Add a vertical line for the observed data point y_i
  geom_vline(xintercept = y_i, linetype = "dashed", color = "red") +
  # Facet the plot by Theta_TVCL
  facet_wrap(~ Theta_TVCL, scales = "free_y",
             labeller = as_labeller(facet_labels), nrow = 3) +
  # Add annotations for the likelihood and -2 log likelihood
  geom_text(data = density_data |> distinct(Theta_TVCL, likelihood, neg2_log_likelihood),
            aes(x = Inf, y = Inf,
                label = paste0("Likelihood: ", round(likelihood, 6),
                               "\n-2 ln(L): ", round(neg2_log_likelihood, 3))),
            hjust = 1.1, vjust = 1.1, size = 4, color = "black") +
  # Customize labels and theme
  labs(
    title = "Likelihood for different TVCL values",
    subtitle = paste("Observed data point yi =", y_i, ", eta_i* = 0"),
    x = "Concentration (C(t))",
    y = "Probability Density"
  ) +
  theme_bw() +
  scale_y_continuous(limits = c(NA, 2)) +
  theme(
    strip.text = element_text(size = 12, face = "bold"),
    plot.title = element_text(size = 14, face = "bold"),
    plot.subtitle = element_text(size = 12)
  )
```

Figure 10: Likelihood of observing a concentration of 2 mg/L at different typical clearance values.

For simplicity, we have assumed \(\eta_i^* = 0\) to generate this plot. We have observed a concentration of 2 mg/L at a given time point. We want to know how likely this particular concentration is under different values of \(\theta_{TVCL}\), with \(\eta_i^*\) fixed. Our observation is clearly much more likely under a clearance of 15 L/h than under 10 or 20 L/h. But how can we explicitly calculate the likelihood term given by Equation 40?

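Since we deal with a normal distribution, the likelihood is given by its probability density function (PDF). The general form is given by:

\[p(x | \mu, \sigma^2) = \frac{1}{\sqrt{2\pi\sigma^2}} \exp\left(-\frac{(x-\mu)^2}{2\sigma^2}\right) \tag{41}\]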

Great! If someone would give us a \(\theta_{TVCL}\) value and an \(\eta_i^*\) value, we would be able to calculate the -2 log likelihood term for that particular data point. Actually, we would need to have the model prediction function at hand for this (or an ODE-solver). The model prediction \(f(x_{ij}, \theta_{TVCL}, \eta_i^*)\) was already defined in Equation 69 (see above). It will be used at various points of the final objective function.
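Applying Equation 41 with \(\mu = f(x_{ij}, \theta_{TVCL}, \eta_i^*)\) and taking \(-2\ln\) gives, per data point, \(\ln(2\pi\sigma^2) + (y_{ij} - f)^2/\sigma^2\). Here is a quick check against R's `dnorm(log = TRUE)`, reusing the numbers behind Figure 10 (y = 2 mg/L at t = 1 h, V = 10 L, \(\sigma^2 = 0.1\), \(\eta_i^* = 0\)):

```r
# Values from the Figure 10 example
y <- 2; t_obs <- 1; Dose <- 100; V_D <- 10; sigma_sq <- 0.1
TVCL <- c(10, 15, 20)
f <- (Dose / V_D) * exp(-TVCL / V_D * t_obs)   # model predictions with eta* = 0

# -2 ln p(y | eta*, theta_TVCL), written out explicitly ...
neg2LL_explicit <- log(2 * pi * sigma_sq) + (y - f)^2 / sigma_sq
# ... and via R's normal density on the log scale
neg2LL_dnorm <- -2 * dnorm(y, mean = f, sd = sqrt(sigma_sq), log = TRUE)

rbind(TVCL = TVCL, explicit = neg2LL_explicit, dnorm = neg2LL_dnorm)
```

The smallest \(-2\ln\) value belongs to \(\theta_{TVCL} = 15\) L/h, matching the visual impression from Figure 10.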

5.4.3 Term 2

A very similar concept applies to the second term of Equation 39. It is given by:

We typically assume that \(\eta_i\) is normally distributed with a mean of 0 and a variance \(\omega^2\). We can make a similar illustration as above. We look at a certain \(\eta_i^*\) value and ask how likely it is to observe this value given the population variance \(\omega^2\) (which is known at the moment of evaluation):

```r
# Set seed for reproducibility (optional)
set.seed(123)

# Define fixed parameters
eta_i_star <- 0.3                          # "Observed" eta_i value
omega_squared_values <- c(0.01, 0.2, 0.5)  # Population variances

# Define the mean for eta_i (assumed to be 0)
mu_eta <- 0

# One row per omega^2, since eta_i ~ N(0, omega^2)
model_predictions <- data.frame(
  omega_squared = omega_squared_values,
  mean = mu_eta
)

# Create a sequence of eta values for plotting the PDFs,
# extending the range to cover the tails adequately
eta_values <- seq(mu_eta - 4 * sqrt(max(omega_squared_values)),
                  mu_eta + 4 * sqrt(max(omega_squared_values)),
                  length.out = 1000)

# Create a data frame with density values for each omega^2
density_data <- model_predictions |>
  rowwise() |>
  do(data.frame(
    omega_squared = .$omega_squared,
    eta = eta_values,
    density = dnorm(eta_values, mean = .$mean, sd = sqrt(.$omega_squared))
  )) |>
  ungroup()

# Calculate the likelihood and -2 log likelihood for the observed eta_i*
likelihood_data <- model_predictions |>
  mutate(
    likelihood = dnorm(eta_i_star, mean = mean, sd = sqrt(omega_squared)),
    neg2_log_likelihood = -2 * log(likelihood)
  )

# Merge likelihood data with density_data for annotation purposes
density_data <- density_data |>
  left_join(likelihood_data, by = "omega_squared")

# Create a named vector for custom facet labels
facet_labels <- setNames(paste("ω² =", omega_squared_values), omega_squared_values)

# Start plotting
ggplot(density_data, aes(x = eta, y = density)) +
  # Plot the density curves
  geom_line(color = "blue", linewidth = 1) +
  # Add a vertical line for the observed eta_i*
  geom_vline(xintercept = eta_i_star, linetype = "dashed", color = "red") +
  # Highlight the point (eta_i*, density at eta_i*); taken from likelihood_data,
  # because the eta grid does not necessarily contain eta_i* exactly
  geom_point(data = likelihood_data, aes(x = eta_i_star, y = likelihood),
             color = "darkgreen", size = 3) +
  # Facet the plot by omega_squared with custom labels
  facet_wrap(~ omega_squared, scales = "free_y",
             labeller = as_labeller(facet_labels), nrow = 3) +
  # Add annotations for the likelihood and -2 log likelihood
  geom_text(data = likelihood_data,
            aes(x = Inf, y = Inf,
                label = paste0("Likelihood: ", round(likelihood, 4),
                               "\n-2 log(L): ", round(neg2_log_likelihood, 2))),
            hjust = 1.1, vjust = 1.1, size = 4, color = "black") +
  # Customize labels and theme
  labs(
    title = "Likelihood term for different ω² values",
    subtitle = paste("etai* =", eta_i_star),
    x = expression(eta),
    y = "Probability Density"
  ) +
  theme_bw() +
  scale_y_continuous(limits = c(NA, 4.5)) +
  theme(
    strip.text = element_text(size = 12, face = "bold"),
    plot.title = element_text(size = 14, face = "bold"),
    plot.subtitle = element_text(size = 12)
  )
```

Figure 11: Likelihood of observing ETA=0.3 for different variances of the inter-individual variability term.

In our little example, we can observe that the likelihood of “observing”[2] \(\eta_i^* = 0.3\) is highest when the IIV variance is 0.2 (about 0.71), somewhat lower for 0.5 (about 0.52), and much lower for 0.01 (about 0.04). This is the information we want to capture with the second likelihood term. As for the first term of Equation 39, we apply the general form of the pdf (Equation 41), which leads to this expression:

Please note that the normal distribution is centered around 0 (\(\mu = 0\)), which is in contrast to the individual level where it was centered around the predicted value \(f(x_{ij}, \theta_{TVCL}, \eta_i^*)\) (see Equation 42). Because of \(\mu = 0\), we can simplify this to:
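Written out, the simplified population-level term is \(\ln\left(p(\eta_i^*|\omega^2)\right) = -\frac{1}{2}\ln(2\pi) - \frac{1}{2}\ln(\omega^2) - \frac{(\eta_i^*)^2}{2\omega^2}\). As a quick sanity check, the sketch below compares this closed form against R's built-in normal log-density and recomputes the values annotated in Figure 11. The helper name `log_p_eta()` is hypothetical and only used for illustration.

```r
# Hypothetical helper: ln p(eta_i* | omega^2) for eta_i ~ N(0, omega^2), constants kept
log_p_eta <- function(eta_i_star, omega2) {
  -0.5 * log(2 * pi) - 0.5 * log(omega2) - eta_i_star^2 / (2 * omega2)
}

omega2_values <- c(0.01, 0.2, 0.5)

# Closed form versus R's built-in normal log-density
ll_closed <- log_p_eta(0.3, omega2_values)
ll_dnorm <- dnorm(0.3, mean = 0, sd = sqrt(omega2_values), log = TRUE)
all.equal(ll_closed, ll_dnorm)  # TRUE

# Likelihood and -2 log-likelihood for each omega^2, as annotated in Figure 11
data.frame(
  omega2 = omega2_values,
  likelihood = exp(ll_closed),
  neg2_log_likelihood = -2 * ll_closed
)
```

Working on the log scale like this avoids multiplying many small densities later, when several observations per individual enter the sum.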

Very good. We have our population-level likelihood term. As with Term 1, we can now calculate this expression once we are given values for \(\eta_i^*\) and \(\omega^2\). The remaining piece is Term 3 of the objective function, which is a bit trickier. Let’s move on to this term.

5.4.4 Term 3

The derivative term is the third and last term of Equation 39 and is given by:

We still need a way to calculate the second derivative of the individual function \(g_i(\eta_i)\), evaluated at \(\eta_i^*\). Deriving \(g_i''(\eta_i^*)\) analytically is not straightforward, and for more complex models it would typically be approximated numerically (e.g., using finite difference methods). Luckily, we have chosen a very simple model and can actually differentiate its closed-form expression. Since this is still quite cumbersome (at least for me), we will use WolframAlpha / Mathematica to find these derivatives and apply the differentiation rules for us.
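To illustrate the finite-difference route mentioned above, here is a minimal sketch of a central second-order difference, \(g''(x) \approx \left(g(x+h) - 2g(x) + g(x-h)\right)/h^2\). The helper `num_second_deriv()` and the step size are assumptions for illustration; this is not necessarily how NONMEM approximates derivatives internally. The example uses the log prior of \(\eta_i\), whose exact second derivative is the constant \(-1/\omega^2\).

```r
# Hypothetical helper: central finite-difference approximation of g''(x)
num_second_deriv <- function(g, x, h = 1e-4) {
  (g(x + h) - 2 * g(x) + g(x - h)) / h^2
}

# Example: log-density of N(0, omega2); its exact second derivative is -1 / omega2
omega2 <- 0.15
log_prior <- function(eta) dnorm(eta, mean = 0, sd = sqrt(omega2), log = TRUE)

num_second_deriv(log_prior, x = 0.3)  # close to -1 / 0.15
```

The step size is a trade-off: a larger `h` increases the truncation error, while a very small `h` amplifies floating-point cancellation in the numerator.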

The function \(g_i(\eta_i)\) was already defined in Equation 18. Given that, its second derivative is the sum of the second derivatives of its two terms:
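Written out, and taking \(g_i\) as the sum of the individual log-likelihood contributions and the log prior (which is how gi_double_prime() in Section 6.1.3 treats it), the decomposition can be sketched as follows; \(n_i\) denotes the number of observations of individual \(i\), a symbol introduced here only for convenience:

\[
g_i''(\eta_i^*) = \sum_{j=1}^{n_i} \frac{d^2}{d\eta_i^2} \ln\left(p(y_{ij}| \eta_i; \theta_{TVCL})\right)\bigg|_{\eta_i = \eta_i^*} + \frac{d^2}{d\eta_i^2} \ln\left(p(\eta_i|\omega^2)\right)\bigg|_{\eta_i = \eta_i^*}
\]

Because \(\ln\left(p(\eta_i|\omega^2)\right) = -\frac{1}{2}\ln(2\pi) - \frac{1}{2}\ln(\omega^2) - \frac{\eta_i^2}{2\omega^2}\), the second summand is simply \(-1/\omega^2\), independent of \(\eta_i\).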

It doesn’t matter whether we differentiate each term of a sum individually and then add the results, or add the terms first and then differentiate the sum as a whole: the result remains the same due to the linearity of differentiation. For this reason, we first derive the second derivative of a single term (ignoring the sum). Our expression for \(\ln \left(p(y_{ij}| \eta_i^*; \theta_{TVCL})\right)\) is given in Equation 43.

According to WolframAlpha, the second derivative of Equation 43 is given by:

The closed-form expression for the model prediction function is defined in Equation 69. However, we haven’t defined its first and second derivatives yet. Similarly, we are going to use WolframAlpha to obtain these derivatives:

which depends on our previous definitions for \(f'(x_{ij}, \theta_{TVCL}, \eta_i^*)\) in Equation 59 and \(f''(x_{ij}, \theta_{TVCL}, \eta_i^*)\) in Equation 60.
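For orientation, the closed form and its two derivatives can be written compactly. Here \(D\) is the dose, \(V\) the volume, \(t_{ij}\) the sampling time, \(f\) is short for \(f(x_{ij}, \theta_{TVCL}, \eta_i)\), and \(k_{ij} = \theta_{TVCL}\, e^{\eta_i}\, t_{ij} / V\); this shorthand is introduced here and is not the notation of Equations 59, 60 and 69. Since \(\partial k_{ij} / \partial \eta_i = k_{ij}\), the chain rule gives:

\[
f = \frac{D}{V}\, e^{-k_{ij}}, \qquad f' = -k_{ij}\, f, \qquad f'' = -k_{ij}\, f - k_{ij}\, f' = k_{ij}\left(k_{ij} - 1\right) f
\]

These are exactly the forms computed by f_pred(), f_prime() and f_double_prime() in Section 6.1.1.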

Now we have to plug our results back into Equation 54 to finalize term 3:
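Assembled into a single expression, and consistent with what gi_double_prime() in Section 6.1.3 computes (with \(n_i\) the number of observations of individual \(i\)), the result can be written as:

\[
g_i''(\eta_i^*) = \sum_{j=1}^{n_i} \frac{-\left(f'(x_{ij}, \theta_{TVCL}, \eta_i^*)\right)^2 + \left(y_{ij} - f(x_{ij}, \theta_{TVCL}, \eta_i^*)\right) f''(x_{ij}, \theta_{TVCL}, \eta_i^*)}{\sigma^2} - \frac{1}{\omega^2}
\]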

Great! We have found the final expression for the second derivative of \(g_i(\eta_i^*)\).

5.4.5 Defining \(\eta_i^*\)

Our first task is to find the particular value of \(\eta_i\) that maximizes the expression in Equation 18. This is nothing other than a Bayesian maximum a posteriori (MAP) estimate, also known as the empirical Bayes estimate (EBE). We can find the mode of \(g_i(\eta_i)\) by:

This means that we search over all possible values of \(\eta_i\) (per individual) for the value that maximizes our log-likelihood function. We have already defined \(\ln\left(p(y_{ij} | \eta_i; \theta_{TVCL})\right)\) and \(\ln\left(p(\eta_i|\omega^2)\right)\) in Equation 43 and Equation 49, respectively[3]. We can substitute these expressions into Equation 65:

Most numerical optimization routines, including R’s optim() which we will use later, minimize functions by default. Since maximizing a function is equivalent to minimizing its negative, we already turn the maximization problem into a minimization problem by negation:

In our simple example, we luckily do not need to rely on ODE solvers (as we have an analytical solution at hand) and can later replace \(f(x_{ij}, \theta_{TVCL}, \eta_i)\) with the closed form expression of a 1 cmt iv bolus model:
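Substituting that closed form into the negated objective gives the following expression (a sketch with \(D\) for the dose, \(V\) for the volume and \(t_{ij}\) for the sampling time, symbols chosen here for illustration). The constant \(\ln(2\pi)\) terms are kept, but they do not change where the minimum lies:

\[
\eta_i^* = \underset{\eta_i}{\arg\min} \left[ \sum_{j=1}^{n_i} \left( \frac{1}{2}\ln(2\pi\sigma^2) + \frac{1}{2\sigma^2}\left( y_{ij} - \frac{D}{V}\exp\left(-\frac{\theta_{TVCL}\, e^{\eta_i}}{V}\, t_{ij}\right) \right)^2 \right) + \frac{1}{2}\ln(2\pi\omega^2) + \frac{\eta_i^2}{2\omega^2} \right]
\]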

Equation 68 has to be minimized numerically for each individual at every iteration of the estimation algorithm. The optimizer searches over \(\eta_i\) for the value \(\eta_i^*\) that minimizes Equation 68, which is the same value that maximizes \(g_i(\eta_i)\).

5.4.6 Putting the pieces together

We have now expressed each of the three pieces of Equation 39 explicitly. Let’s put them together. After simplification, our objective function is represented by:

It seems that NONMEM ignores all constants during the optimization process (Bauer (2020) and Wang (2007)), which is why we can get rid of the \(\ln(2\pi)\) terms. We can simplify to:
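Reading the five terms off ofv_function() in Section 6.1.4 (term1 to term5), the simplified per-individual objective function corresponds to:

\[
OF_i = n_i \ln(\sigma^2) + \frac{1}{\sigma^2}\sum_{j=1}^{n_i} \left(y_{ij} - f(x_{ij}, \theta_{TVCL}, \eta_i^*)\right)^2 + \ln(\omega^2) + \frac{(\eta_i^*)^2}{\omega^2} + \ln\left|g_i''(\eta_i^*)\right|
\]

where \(n_i\) is the number of observations of individual \(i\).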

Please note that the objective function \(OF_i\) is a function with multiple input parameters. Technically, we would have to write something like this:

to clearly state the dependencies. For the sake of readability, however, we simply denote it \(OF_i\). The last step is to calculate the objective function for the whole population of n individuals:

\[OF = \sum_{i=1}^{n} OF_i \tag{76}\]

Very good. We have now set up the final equation for our objective function and can reproduce these functions in R!

6 Reproduction of NONMEM’s iterations

6.1 Function definitions

To reproduce the iterations of the NONMEM algorithm, we need to define a couple of functions in R, namely every expression that enters Equation 75. We define these functions step by step below.

6.1.1 Structural model function and its derivatives

The first function we are defining in R is Equation 69, which gives us a prediction for a given \(x_{ij}, \theta_{TVCL}, \eta_i\) based on our structural model.

function: f_pred()

# Define structural model prediction function
f_pred <- function(eta_i, dose, vd, theta_tvcl, t) {
  exp_eta_i <- exp(eta_i)
  exponent <- -1 * (theta_tvcl * exp_eta_i / vd) * t
  result <- (dose / vd) * exp(exponent)
  return(result)
}

We also have to define the derivatives of f_pred(). This is the definition of the first derivative function (reproduction of Equation 59):

function: f_prime()

# Define the first derivative of the structural model prediction function
f_prime <- function(eta_i, dose, vd, theta_tvcl, t) {
  term <- (theta_tvcl * t * exp(eta_i)) / vd
  derivative <- -term * f_pred(eta_i, dose, vd, theta_tvcl, t)
  return(derivative)
}

This is the definition of the second derivative function (reproduction of Equation 60):

function: f_double_prime()

# Define the second derivative of the structural model prediction function
f_double_prime <- function(eta_i, dose, vd, theta_tvcl, t) {
  term <- (theta_tvcl * t * exp(eta_i)) / vd
  second_derivative <- term * (term - 1) * f_pred(eta_i, dose, vd, theta_tvcl, t)
  return(second_derivative)
}

We now need to pay attention to what R actually returns from these functions. Every individual has multiple data points, so t is a vector. This propagates through the calculations, and the returned result, derivative, and second_derivative objects are vectors as well, with one element per time point.
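A small sketch of this vector behaviour: passing one individual's sampling times returns one prediction per time point, and a later sum() collapses the vector into a single value per individual. The sampling times, observations and parameter values below are hypothetical, and f_pred() is restated in compact form so the block runs on its own.

```r
# Compact restatement of f_pred() from above
f_pred <- function(eta_i, dose, vd, theta_tvcl, t) {
  (dose / vd) * exp(-(theta_tvcl * exp(eta_i) / vd) * t)
}

t_i <- c(0.01, 3, 6, 12, 24)            # hypothetical sampling times of one individual
y_i <- c(31.5, 28.0, 25.1, 20.3, 13.2)  # hypothetical observations

pred_i <- f_pred(eta_i = 0, dose = 100, vd = 3.15, theta_tvcl = 0.1, t = t_i)
length(pred_i)            # 5: one prediction per time point
sum((y_i - pred_i)^2)     # a single value for the whole individual
```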

6.1.2 Function to find the mode \(\eta_i^*\)

Now we need to define two functions to reproduce Equation 68. Since finding the mode \(\eta_i^*\) is an optimization problem, we need to define its own objective function, which we are going to minimize numerically (Equation 68 is already the negated form):

Since our optimizer function (optim) minimizes its objective function, we already turned the problem into a minimization problem by negation (see Section 5.4.5). Maximizing the positive version of the term or minimizing the negative version given by Equation 68 yields the same \(\eta_i^*\). The actual optimizer is encoded in a separate function, which takes the MAP objective function defined above as an argument:
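The bodies of obj_fun_eta_i_star() and compute_eta_i_star() are not shown in this part of the post. A minimal sketch that is consistent with the argument list ofv_function() passes to compute_eta_i_star() could look like the block below; both function bodies, the choice of the "BFGS" method, and the toy data are assumptions, not the original definitions.

```r
# Structural model, restated so this block runs on its own
f_pred <- function(eta_i, dose, vd, theta_tvcl, t) {
  (dose / vd) * exp(-(theta_tvcl * exp(eta_i) / vd) * t)
}

# Assumed MAP objective: the negated g_i(eta_i) (Equation 68 style), summed over
# all observations of one individual; the prior term enters only once
obj_fun_eta_i_star <- function(eta_i, y_i, t, theta_tvcl, vd, dose,
                               sigma2, omega2, f_pred) {
  pred <- f_pred(eta_i, dose, vd, theta_tvcl, t)
  sum(0.5 * log(2 * pi * sigma2) + (y_i - pred)^2 / (2 * sigma2)) +
    0.5 * log(2 * pi * omega2) + eta_i^2 / (2 * omega2)
}

# Assumed optimizer wrapper; extra arguments are forwarded to the objective via optim's ...
compute_eta_i_star <- function(y_i, t, theta_tvcl, vd, dose, sigma2, omega2,
                               f_pred, eta_i_init = 0, obj_fun_eta_i_star) {
  fit <- optim(
    par = eta_i_init,
    fn = obj_fun_eta_i_star,
    y_i = y_i, t = t, theta_tvcl = theta_tvcl, vd = vd, dose = dose,
    sigma2 = sigma2, omega2 = omega2, f_pred = f_pred,
    method = "BFGS"  # R warns that Nelder-Mead is unreliable in one dimension
  )
  fit$par
}

# Usage with noise-free toy data generated at eta = 0.2 (all values hypothetical)
t_obs <- c(0.01, 3, 6, 12, 24)
y_obs <- f_pred(0.2, dose = 100, vd = 3.15, theta_tvcl = 0.1, t = t_obs)
eta_hat <- compute_eta_i_star(
  y_i = y_obs, t = t_obs, theta_tvcl = 0.1, vd = 3.15, dose = 100,
  sigma2 = 1, omega2 = 0.05, f_pred = f_pred,
  obj_fun_eta_i_star = obj_fun_eta_i_star
)
eta_hat  # pulled from 0.2 toward 0 by the prior term
```

Even with perfect data, the mode is shrunk toward the population value 0, because the prior term penalizes large \(\eta_i\); the tighter \(\omega^2\), the stronger that pull.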

Please note that the compute_eta_i_star() function takes as arguments the functions which we have defined prior to that: f_pred() and obj_fun_eta_i_star(). Another hint: We need to find \(\eta_i^*\) for each individual. That’s why we’ll apply our function compute_eta_i_star() on an individual level and it will return a single value of \(\eta_i^*\). Please note that each individual has multiple observations and therefore obj_fun_eta_i_star() calculates the sum of the squared residual terms.

6.1.3 Second derivative function

Now we need an R function for the second derivative \(g_i''(\eta_i)\), which is given by Equation 64. Again, we sum the likelihood term over all observations to obtain a single value per individual. The prior term, however, enters only once, regardless of the number of observations. This is why the likelihood increasingly dominates the prior as the number of observations grows.

function: gi_double_prime()

# Define second derivative of gi
gi_double_prime <- function(eta_i, y_i, t, theta_tvcl, vd, dose, sigma2, omega2) {
  # Calculate f_pred, f_prime, and f_double_prime for all time points
  f_pred_val <- f_pred(eta_i, dose, vd, theta_tvcl, t)
  f_prime_val <- f_prime(eta_i, dose, vd, theta_tvcl, t)
  f_double_prime_val <- f_double_prime(eta_i, dose, vd, theta_tvcl, t)

  # Compute the numerator for each observation
  numerator <- (-f_prime_val^2 + (y_i - f_pred_val) * f_double_prime_val)

  # Divide by sigma^2 for each observation
  term <- numerator / sigma2

  # Sum the terms across all observations for the individual
  sum_term <- sum(term)

  # Subtract the term 1 / omega^2
  second_derivative <- sum_term - (1 / omega2)

  # return the second derivative
  return(second_derivative)
}
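Before relying on the analytical expression, it can be reassuring to compare it with a numerical second derivative of \(g_i\) itself. The sketch below restates the functions compactly (same formulas as above), evaluates \(g_i\) with its constants via dnorm(log = TRUE), and applies a central finite difference at an arbitrary \(\eta_i = 0.1\). The observations and parameter values are hypothetical.

```r
# Compact restatements so this check runs on its own (same formulas as Section 6.1)
f_pred <- function(eta_i, dose, vd, theta_tvcl, t) {
  (dose / vd) * exp(-(theta_tvcl * exp(eta_i) / vd) * t)
}

# g_i(eta_i): individual log-likelihood plus log prior, constants included
gi <- function(eta_i, y_i, t, theta_tvcl, vd, dose, sigma2, omega2) {
  pred <- f_pred(eta_i, dose, vd, theta_tvcl, t)
  sum(dnorm(y_i, mean = pred, sd = sqrt(sigma2), log = TRUE)) +
    dnorm(eta_i, mean = 0, sd = sqrt(omega2), log = TRUE)
}

# Analytical g_i'' written with k = theta_tvcl * exp(eta_i) * t / vd
gi_double_prime <- function(eta_i, y_i, t, theta_tvcl, vd, dose, sigma2, omega2) {
  k <- theta_tvcl * exp(eta_i) * t / vd
  pred <- f_pred(eta_i, dose, vd, theta_tvcl, t)
  f_prime_val <- -k * pred
  f_double_prime_val <- k * (k - 1) * pred
  sum((-f_prime_val^2 + (y_i - pred) * f_double_prime_val) / sigma2) - 1 / omega2
}

# Hypothetical observations and parameter values (not the data of this post)
pars <- list(
  y_i = c(31.5, 28.0, 25.1, 20.3, 13.2), t = c(0.01, 3, 6, 12, 24),
  theta_tvcl = 0.1, vd = 3.15, dose = 100, sigma2 = 0.1, omega2 = 0.15
)
g_at <- function(eta) do.call(gi, c(list(eta_i = eta), pars))

# Central finite difference of g_i versus the analytical value at eta = 0.1
eta <- 0.1
h <- 1e-4
numeric_d2 <- (g_at(eta + h) - 2 * g_at(eta) + g_at(eta - h)) / h^2
analytic_d2 <- do.call(gi_double_prime, c(list(eta_i = eta), pars))
c(numeric = numeric_d2, analytic = analytic_d2)
```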

From here on, we only need to define one last function: the objective function, which brings all the pieces together.

6.1.4 Objective function

When defining the objective function (see Equation 75), we rely on all other functions defined above. Note that ofv_function() below calculates the objective function value for a single individual. Later on, we will loop over all individuals and sum up the individual OFVs as defined in Equation 76.

function: ofv_function()

# Define the objective function OFV
ofv_function <- function(y_i, t, theta_tvcl, vd, dose, sigma2, omega2,
                         f_pred, gi_double_prime, compute_eta_i_star,
                         obj_fun_eta_i_star, eta_i_init = 0, return_val = "ofv") {
  # Compute eta_i_star
  eta_i_star <- compute_eta_i_star(
    y_i = y_i,
    t = t,
    theta_tvcl = theta_tvcl,
    vd = vd,
    dose = dose,
    sigma2 = sigma2,
    omega2 = omega2,
    f_pred = f_pred,
    eta_i_init = eta_i_init,
    obj_fun_eta_i_star = obj_fun_eta_i_star
  )

  # Compute f(eta_i_star)
  f_pred_value <- f_pred(eta_i_star, dose, vd, theta_tvcl, t)

  # Compute residual term
  residual <- y_i - f_pred_value
  residual_squared <- residual^2

  # Compute the first term: n_i * ln(sigma^2)
  term1 <- length(y_i) * log(sigma2)

  # Compute the second term: sum((y_i - f(eta_i_star))^2) / sigma2
  term2 <- sum(residual_squared) / sigma2

  # Compute the third term: ln(omega^2)
  term3 <- log(omega2)

  # Compute the fourth term: (eta_i_star)^2 / omega2
  term4 <- (eta_i_star^2) / omega2

  # Compute gi''(eta_i_star)
  gi_double_prime_value <- gi_double_prime(
    eta_i = eta_i_star,
    y_i = y_i,
    t = t,
    theta_tvcl = theta_tvcl,
    vd = vd,
    dose = dose,
    sigma2 = sigma2,
    omega2 = omega2
  )

  # Compute term5: ln of absolute value of gi_double_prime_value
  term5 <- log(abs(gi_double_prime_value))

  # Sum all terms to compute the objective function value
  ofv <- term1 + term2 + term3 + term4 + term5

  # return by default ofv, but we can also request to return the eta_i_star
  if (return_val == "ofv") {
    return(ofv)
  } else if (return_val == "eta_i_star") {
    return(eta_i_star)
  }
}
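To see how the individual contributions combine into Equation 76, here is a compact, self-contained sketch that condenses the steps above into one hypothetical function, laplace_ofv_i(), and sums it over the individuals of a small toy data set with split() and vapply(). The data set, the dose and all parameter values are made up for illustration; this is not the data simulated in this post, so the result will not match the .ext file.

```r
# Hypothetical condensed version of the per-individual OF (same five terms as ofv_function())
laplace_ofv_i <- function(y_i, t, theta_tvcl, vd, dose, sigma2, omega2) {
  pred_fun <- function(eta) (dose / vd) * exp(-(theta_tvcl * exp(eta) / vd) * t)
  # MAP objective without constants (they do not move the minimum)
  neg_gi <- function(eta) sum((y_i - pred_fun(eta))^2) / (2 * sigma2) + eta^2 / (2 * omega2)
  eta_star <- optim(0, neg_gi, method = "BFGS")$par
  # Prediction and its derivatives at the mode
  k <- theta_tvcl * exp(eta_star) * t / vd
  pred <- pred_fun(eta_star)
  fp <- -k * pred
  fpp <- k * (k - 1) * pred
  gi_dd <- sum((-fp^2 + (y_i - pred) * fpp) / sigma2) - 1 / omega2
  length(y_i) * log(sigma2) + sum((y_i - pred)^2) / sigma2 +
    log(omega2) + eta_star^2 / omega2 + log(abs(gi_dd))
}

# Hypothetical two-subject data set
toy <- data.frame(
  ID = rep(1:2, each = 5),
  TIME = rep(c(0.01, 3, 6, 12, 24), times = 2),
  DV = c(31.5, 28.0, 25.1, 20.3, 13.2, 31.2, 26.9, 23.0, 17.1, 9.4)
)

# One OF value per individual, then the population sum (Equation 76)
ofv_by_id <- vapply(
  split(toy, toy$ID),
  function(d) laplace_ofv_i(d$DV, d$TIME, theta_tvcl = 0.1, vd = 3.15,
                            dose = 100, sigma2 = 0.1, omega2 = 0.15),
  numeric(1)
)
total_ofv <- sum(ofv_by_id)
total_ofv
```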

Great! Now we are all set to reproduce the objective function values for each iteration.

6.2 Reproducing OFVs for iterations

Let’s start with a little recap. Our simulated concentration-time data was given by this data frame:


# show simulated data
sim_data_reduced |>
  select(ID, TIME, DV) |>
  head() |>
  mytbl()

| ID | TIME | DV |
|---:|-----:|---:|
| 1 | 0.01 | 30.8160 |
| 1 | 3.00 | 24.5520 |
| 1 | 6.00 | 17.9250 |
| 1 | 12.00 | 10.1100 |
| 1 | 24.00 | 3.5975 |
| 2 | 0.01 | 31.4550 |

The following .ext file contained the information about the objective function values, which we are trying to reproduce in this chapter:


# show first row
ext_file |>
  mytbl()

ITERATION

CL

V

RUV_VAR

IIV_VAR

OBJ

0

0.100000

3.15

0.1

0.1500000

41.845437

1

0.168370

3.15